Before optimizing content for AI search, technical foundations must be solid. AI systems can't cite content they can't access, parse, or understand. A comprehensive technical audit identifies barriers preventing AI visibility and prioritizes fixes by impact.

This checklist covers every technical factor affecting how AI crawlers discover, process, and evaluate your website.

AI systems use dedicated crawlers with specific behaviors. Standard SEO crawler audits miss AI-specific issues.

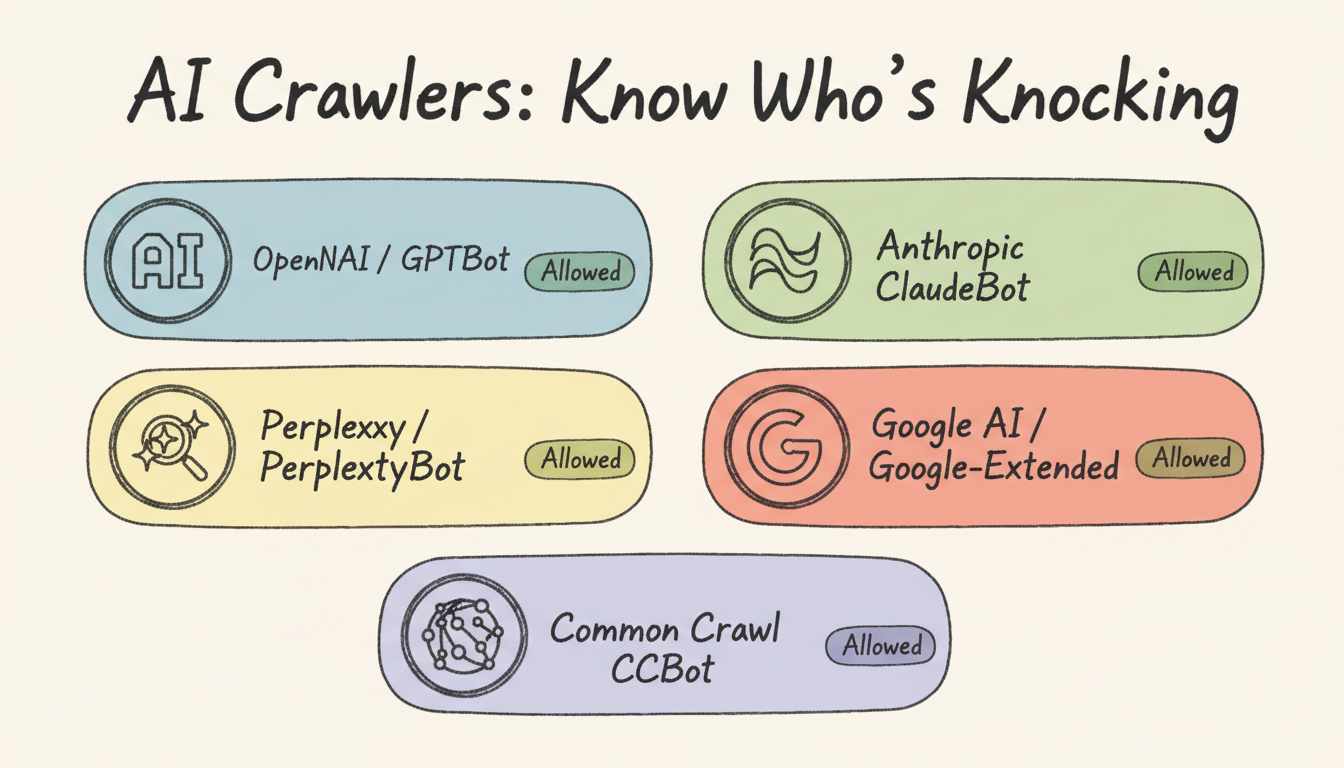

Check for each AI crawler:

| Crawler | User-Agent | Check Status |
| --- | --- | --- |
| OpenAI | GPTBot | Allowed / Blocked / Missing |
| Anthropic | ClaudeBot | Allowed / Blocked / Missing |
| Perplexity | PerplexityBot | Allowed / Blocked / Missing |
| Google AI | Google-Extended | Allowed / Blocked / Missing |
| Common Crawl | CCBot | Allowed / Blocked / Missing |
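One way to fill in the status column is to parse your robots.txt with Python's standard `urllib.robotparser`. The following is a minimal sketch rather than a complete audit tool: the `crawler_status` helper and the sample rules are illustrative assumptions, and "Missing" is taken here to mean that the crawler is not named in any `User-agent` line.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens from the table above.
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended", "CCBot"]


def crawler_status(robots_txt: str, url: str = "https://yourdomain.com/") -> dict[str, str]:
    """Label each AI crawler as Allowed, Blocked, or Missing for one URL."""
    lines = robots_txt.splitlines()

    # Collect every token that is named explicitly in a User-agent line.
    named = set()
    for line in lines:
        field, _, value = line.partition(":")
        if field.strip().lower() == "user-agent":
            named.add(value.split("#")[0].strip().lower())

    parser = RobotFileParser()
    parser.parse(lines)

    status = {}
    for agent in AI_CRAWLERS:
        if agent.lower() not in named:
            status[agent] = "Missing"  # only wildcard rules (if any) apply
        elif parser.can_fetch(agent, url):
            status[agent] = "Allowed"
        else:
            status[agent] = "Blocked"
    return status


sample_rules = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Allow: /

User-agent: *
Disallow: /private/
"""
print(crawler_status(sample_rules))
```

A crawler reported as "Missing" still falls under any `User-agent: *` rules, so it is worth checking those separately.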

Audit steps:

Common issues found:

AI crawlers may receive different responses than browsers.

Test methodology:

```bash
curl -A "GPTBot" -I https://yourdomain.com/target-page
curl -A "ClaudeBot" -I https://yourdomain.com/target-page
curl -A "PerplexityBot" -I https://yourdomain.com/target-page
```

Response codes to check:

| Code | Meaning | Action Required |
| --- | --- | --- |
| 200 | Success | None |
| 301/302 | Redirect | Verify destination accessible |
| 403 | Forbidden | Check WAF/security rules |
| 429 | Rate limited | Adjust rate limiting |
| 5xx | Server error | Investigate server issues |
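When many pages and user agents are tested, the table lookup can be scripted. This sketch assumes you have captured the header output of a `curl -I` call as text; the helper names `status_from_headers` and `action_required` are hypothetical, and the actions are copied from the table above.

```python
def status_from_headers(head_output: str) -> int:
    """Read the status code from the first line of `curl -I` output,
    e.g. 'HTTP/1.1 403 Forbidden' or 'HTTP/2 200'."""
    first_line = head_output.strip().splitlines()[0]
    return int(first_line.split()[1])


def action_required(code: int) -> str:
    """Map a status code to the action listed in the table."""
    if code == 200:
        return "None"
    if code in (301, 302):
        return "Verify destination accessible"
    if code == 403:
        return "Check WAF/security rules"
    if code == 429:
        return "Adjust rate limiting"
    if 500 <= code <= 599:
        return "Investigate server issues"
    return "Not covered by the table; review manually"


captured = "HTTP/1.1 403 Forbidden\r\nServer: example\r\n"
print(action_required(status_from_headers(captured)))
```

Comparing the result for each AI user agent with the result for a regular browser user agent shows whether crawlers are being treated differently.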

Security system audit:

Evaluate how efficiently AI crawlers can access your content.

Factors to assess:

| Factor | Good | Poor | Priority |
| --- | --- | --- | --- |
| Average response time | <500ms | >2000ms | High |
| Crawl depth to content | 1-3 clicks | 5+ clicks | Medium |
| Internal linking density | Multiple paths | Orphan pages | High |
| XML sitemap coverage | 100% indexed pages | <80% | High |
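Sitemap coverage is the easiest of these factors to measure automatically. The sketch below assumes you already have the sitemap XML as a string and a list of indexed URLs exported from your own tooling; `sitemap_coverage` is an illustrative helper, not part of any library.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_coverage(sitemap_xml: str, indexed_urls: list[str]) -> float:
    """Return the percentage of indexed URLs that appear in the sitemap."""
    root = ET.fromstring(sitemap_xml)
    listed = {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}
    if not indexed_urls:
        return 100.0
    covered = sum(1 for url in indexed_urls if url in listed)
    return 100.0 * covered / len(indexed_urls)


sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://yourdomain.com/</loc></url>"
    "<url><loc>https://yourdomain.com/blog</loc></url>"
    "</urlset>"
)
indexed = [
    "https://yourdomain.com/",
    "https://yourdomain.com/blog",
    "https://yourdomain.com/services",
    "https://yourdomain.com/about",
]
print(f"{sitemap_coverage(sitemap, indexed):.0f}% of indexed pages are in the sitemap")
```

The comparison is an exact string match, so trailing slashes and protocol differences should be normalized first if your exports are inconsistent.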

Schema markup provides machine-readable context that AI systems use for extraction and citation. Understanding how schema markup maps to knowledge graph relationships is crucial for effective implementation.

Audit each page type:

| Page Type | Required Schema | Optional Schema | Status |
| --- | --- | --- | --- |
| Homepage | Organization | WebSite, BreadcrumbList | Check |
| Blog posts | Article | FAQPage, HowTo | Check |
| Product pages | Product | Review, Offer | Check |
| Service pages | Service | FAQPage, LocalBusiness | Check |
| FAQ pages | FAQPage | - | Check |
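The per-page check can be approximated by extracting JSON-LD from a page's HTML and comparing the declared `@type` with the required schema from the table. This is a sketch under several assumptions: the page uses JSON-LD (not Microdata or RDFa), types are declared at the top level rather than inside `@graph`, and subtypes such as `BlogPosting` are not treated as `Article`. The class and function names are hypothetical.

```python
import json
from html.parser import HTMLParser

# Required schema per page type, taken from the table above.
REQUIRED_SCHEMA = {
    "homepage": "Organization",
    "blog post": "Article",
    "product page": "Product",
    "service page": "Service",
    "faq page": "FAQPage",
}


class JsonLdExtractor(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self.in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self.in_jsonld = False

    def handle_data(self, data):
        if self.in_jsonld:
            self.blocks[-1] += data


def schema_types(html: str) -> set:
    """Return the set of top-level @type values declared in JSON-LD."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    types = set()
    for block in extractor.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # invalid blocks are a separate audit finding
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict):
                declared = item.get("@type", [])
                types.update([declared] if isinstance(declared, str) else declared)
    return types


def has_required_schema(page_type: str, html: str) -> bool:
    return REQUIRED_SCHEMA[page_type] in schema_types(html)


page = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Article", "headline": "Audit"}'
    "</script></head><body></body></html>"
)
print(has_required_schema("blog post", page))
```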

Testing sequence:

Common schema errors:

| Error Type | Impact | Detection Method |
| --- | --- | --- |
| Invalid JSON syntax | Complete failure | JSON validator |
| Wrong @type | Misinterpretation | Schema validator |
| Missing required fields | Reduced visibility | Rich Results Test |
| Duplicate conflicting markup | Confusion | Manual inspection |
| Incorrect nesting | Parsing errors | Structured data testing |
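Two of these errors, invalid JSON syntax and duplicate conflicting markup, can be caught with a few lines of standard-library Python before reaching for external validators. The sketch below assumes the JSON-LD blocks have already been extracted as raw strings; `schema_errors` is a hypothetical helper, and it does not check required fields or nesting, which still need the tools named in the table.

```python
import json


def schema_errors(jsonld_blocks: list[str]) -> list[str]:
    """Report invalid JSON, missing @type, and conflicting duplicate types."""
    errors = []
    first_seen = {}  # serialized @type -> (block index, parsed data)
    for index, raw in enumerate(jsonld_blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(f"block {index}: invalid JSON syntax ({exc.msg})")
            continue
        if not isinstance(data, dict) or "@type" not in data:
            errors.append(f"block {index}: no top-level @type")
            continue
        key = json.dumps(data["@type"])
        if key in first_seen and first_seen[key][1] != data:
            earlier = first_seen[key][0]
            errors.append(f"block {index}: conflicts with block {earlier} for @type {key}")
        first_seen.setdefault(key, (index, data))
    return errors


blocks = [
    '{"@type": "Organization", "name": "A"}',
    '{"@type": "Organization", "name": "A",}',  # trailing comma is invalid JSON
    '{"@type": "Organization", "name": "B"}',   # same type, different data
]
for problem in schema_errors(blocks):
    print(problem)
```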

Beyond syntax, evaluate semantic quality.

Quality factors:

AI systems must access and parse your content directly.

Content hidden behind JavaScript may be invisible to AI crawlers.

Testing process:

JavaScript dependency matrix:

| Content Element | Server-rendered | Client-rendered | Priority Fix |
| --- | --- | --- | --- |
| Main body text | ✓ Required | High risk | High |
| Headlines (H1-H6) | ✓ Required | High risk | High |
| FAQ content | ✓ Required | Medium risk | Medium |
| Navigation | Preferred | Lower risk | Low |
| Comments | Optional | Acceptable | Low |
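A quick way to see what a non-rendering crawler receives is to inspect the raw HTML response, without executing any JavaScript, and count the headings and visible words it contains. The following sketch uses only the standard library; `RawContentProbe` and `server_rendered_summary` are illustrative names, and the two sample pages are invented for the demonstration.

```python
from html.parser import HTMLParser


class RawContentProbe(HTMLParser):
    """Count headings and visible words in HTML without running scripts."""

    SKIPPED = {"script", "style", "template"}
    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.headings = 0
        self.words = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1
        elif tag in self.HEADINGS:
            self.headings += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.words += len(data.split())


def server_rendered_summary(raw_html: str) -> dict:
    probe = RawContentProbe()
    probe.feed(raw_html)
    return {"headings": probe.headings, "visible_words": probe.words}


client_only = '<html><body><div id="root"></div><script>render()</script></body></html>'
server_side = "<html><body><h1>Audit guide</h1><p>Check every crawler.</p></body></html>"
print(server_rendered_summary(client_only))
print(server_rendered_summary(server_side))
```

A page whose raw HTML shows no headings and almost no visible words is likely relying on client-side rendering for its main content.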

Verify AI systems can extract meaningful content.

Manual extraction test:

Accessibility factors:

| Factor | Good Practice | Issues to Fix |
| --- | --- | --- |
| Text in HTML | Direct text content | Text in images |
| Heading structure | Logical H1→H6 flow | Skipped levels, multiple H1s |
| List formatting | Semantic `<ul>`/`<ol>` | Visual-only formatting |
| Table structure | Proper markup | Tables for layout |
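The heading-structure row lends itself to an automated check. This minimal sketch flags the two issues named in the table, skipped levels and multiple H1s, from a page's HTML; `HeadingCollector` and `heading_issues` are hypothetical names.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []

    h1_count = levels.count(1)
    if h1_count > 1:
        issues.append(f"multiple H1s ({h1_count})")

    # A heading may go at most one level deeper than the one before it.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"skipped level: H{previous} -> H{current}")
    return issues


print(heading_issues("<h1>A</h1><h3>B</h3><h1>C</h1>"))
```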

Information architecture affects how AI systems understand content relationships. When evaluating your technical foundation, consider the key differences between SEO and AEO in your overall strategy.

URL quality checklist:

| Factor | Optimal | Suboptimal | Fix Priority |
| --- | --- | --- | --- |
| Hierarchy | /category/subcategory/page | /p?id=12345 | High |
| Keywords | /blog/aeo-optimization | /blog/post-123 | Medium |
| Depth | 3-4 levels max | 6+ levels | Medium |
| Parameters | Minimal | Multiple tracking params | Low |
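The checklist can be turned into rough heuristics with `urllib.parse`. The rules below are assumptions chosen to match the examples in the table (a numeric ID parameter, a slug such as `post-123`, six or more path levels, more than one `utm_` parameter); they are a starting point, and `url_issues` is a hypothetical helper.

```python
import re
from urllib.parse import parse_qsl, urlparse


def url_issues(url: str) -> list[str]:
    """Flag URL patterns that the checklist lists as suboptimal."""
    parts = urlparse(url)
    segments = [segment for segment in parts.path.split("/") if segment]
    params = parse_qsl(parts.query, keep_blank_values=True)
    issues = []

    # Hierarchy: content addressed by an opaque numeric ID, e.g. /p?id=12345
    for key, value in params:
        if value.isdigit():
            issues.append(f"numeric ID parameter '{key}'")

    # Keywords: a final slug such as post-123 carries no topic words
    if segments and re.fullmatch(r"[a-z]*-?\d+", segments[-1]):
        issues.append(f"non-descriptive slug '{segments[-1]}'")

    # Depth: six or more path levels
    if len(segments) >= 6:
        issues.append(f"path depth {len(segments)}")

    # Parameters: more than one tracking parameter (assumed utm_ prefix)
    tracking = [key for key, _ in params if key.startswith("utm_")]
    if len(tracking) > 1:
        issues.append(f"{len(tracking)} tracking parameters")
    return issues


for candidate in (
    "https://yourdomain.com/blog/aeo-optimization",
    "https://yourdomain.com/p?id=12345",
    "https://yourdomain.com/blog/post-123",
):
    print(candidate, url_issues(candidate))
```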

Internal links help AI systems discover and contextualize content.

Audit metrics:

| Metric | Target | Action If Below |
| --- | --- | --- |
| Links to priority pages | 10+ internal links | Add contextual links |
| Orphan pages | 0 | Connect to relevant content |
| Link anchor text | Descriptive | Update generic anchors |
| Broken internal links | 0 | Fix or remove |
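Given a crawl export of internal links, most of these metrics reduce to simple graph operations. The sketch below assumes the export can be shaped as a dictionary mapping each existing page to the pages it links to; `link_audit` and the sample site are illustrative. It reports inbound link counts, orphan pages, broken targets, and click depth from the homepage via breadth-first search.

```python
from collections import deque


def link_audit(links: dict[str, list[str]], home: str = "/") -> dict:
    """Summarize an internal link graph of the form {page: [linked pages]}."""
    # Inbound links per existing page (self-links and duplicates ignored).
    inbound = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):
            if target != source and target in inbound:
                inbound[target] += 1

    # Orphans: existing pages that no other page links to.
    orphans = sorted(p for p, count in inbound.items() if count == 0 and p != home)

    # Broken links: targets that are not known pages.
    broken = sorted({t for targets in links.values() for t in targets if t not in links})

    # Click depth from the homepage via breadth-first search.
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target in links and target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)

    return {"inbound": inbound, "orphans": orphans, "broken": broken, "depth": depth}


site = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/aeo", "/"],
    "/blog/aeo": ["/services", "/missing"],
    "/services": [],
    "/old-page": ["/"],
}
report = link_audit(site)
print(report["orphans"], report["broken"], report["depth"])
```

The inbound counts for priority pages can then be compared with the 10+ target from the table.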

Structure assessment:

Site speed affects both crawlability and user experience signals.

Benchmark assessment:

| Metric | Good | Needs Work | Poor |
| --- | --- | --- | --- |
| LCP (Largest Contentful Paint) | <2.5s | 2.5-4s | >4s |
| FID (First Input Delay) | <100ms | 100-300ms | >300ms |
| CLS (Cumulative Layout Shift) | <0.1 | 0.1-0.25 | >0.25 |
| TTFB (Time to First Byte) | <200ms | 200-500ms | >500ms |
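To apply these benchmarks across many pages, the thresholds can be encoded once and reused. This sketch copies the values from the table; the `rate` helper is hypothetical, and the measurements themselves are assumed to come from whatever performance tool you already use.

```python
# (good below, poor above) per metric, copied from the benchmark table.
# LCP is in seconds, FID and TTFB in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FID": (100, 300),
    "CLS": (0.1, 0.25),
    "TTFB": (200, 500),
}


def rate(metric: str, value: float) -> str:
    """Classify a measurement as Good, Needs Work, or Poor."""
    good_below, poor_above = THRESHOLDS[metric]
    if value < good_below:
        return "Good"
    if value <= poor_above:
        return "Needs Work"
    return "Poor"


measurements = {"LCP": 2.0, "FID": 150, "CLS": 0.3, "TTFB": 200}
for metric, value in measurements.items():
    print(metric, rate(metric, value))
```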

Infrastructure checks:

| Component | Check | Impact on AI |
| --- | --- | --- |
| Server location | Geographic distribution | Crawl speed |
| CDN configuration | Edge caching | Availability |
| Compression | Gzip/Brotli enabled | Efficiency |
| HTTP/2 or HTTP/3 | Protocol support | Connection handling |

Technical security indicators contribute to authority assessment.

| Factor | Required | Status |
| --- | --- | --- |
| HTTPS everywhere | Yes | Check |
| Valid SSL certificate | Yes | Check |
| HSTS enabled | Recommended | Check |
| Mixed content issues | None | Check |
| Security headers | Present | Check |
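Some of these rows can be checked from a single response's headers. In the sketch below, HSTS is detected through the standard `Strict-Transport-Security` header; the list of other security headers is an assumption, since the table does not name specific ones, and `security_status` is a hypothetical helper. Certificate validity and mixed content are not covered and need separate checks.

```python
# Assumed set of headers to look for; adjust to your own policy.
ASSUMED_SECURITY_HEADERS = [
    "content-security-policy",
    "x-content-type-options",
    "x-frame-options",
    "referrer-policy",
]


def security_status(url: str, headers: dict[str, str]) -> dict:
    """Summarize HTTPS, HSTS, and missing security headers for one response."""
    lowered = {name.lower(): value for name, value in headers.items()}
    return {
        "https": url.lower().startswith("https://"),
        "hsts": "strict-transport-security" in lowered,
        "missing_security_headers": [
            name for name in ASSUMED_SECURITY_HEADERS if name not in lowered
        ],
    }


response_headers = {
    "Strict-Transport-Security": "max-age=31536000",
    "X-Content-Type-Options": "nosniff",
}
print(security_status("https://yourdomain.com/", response_headers))
```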

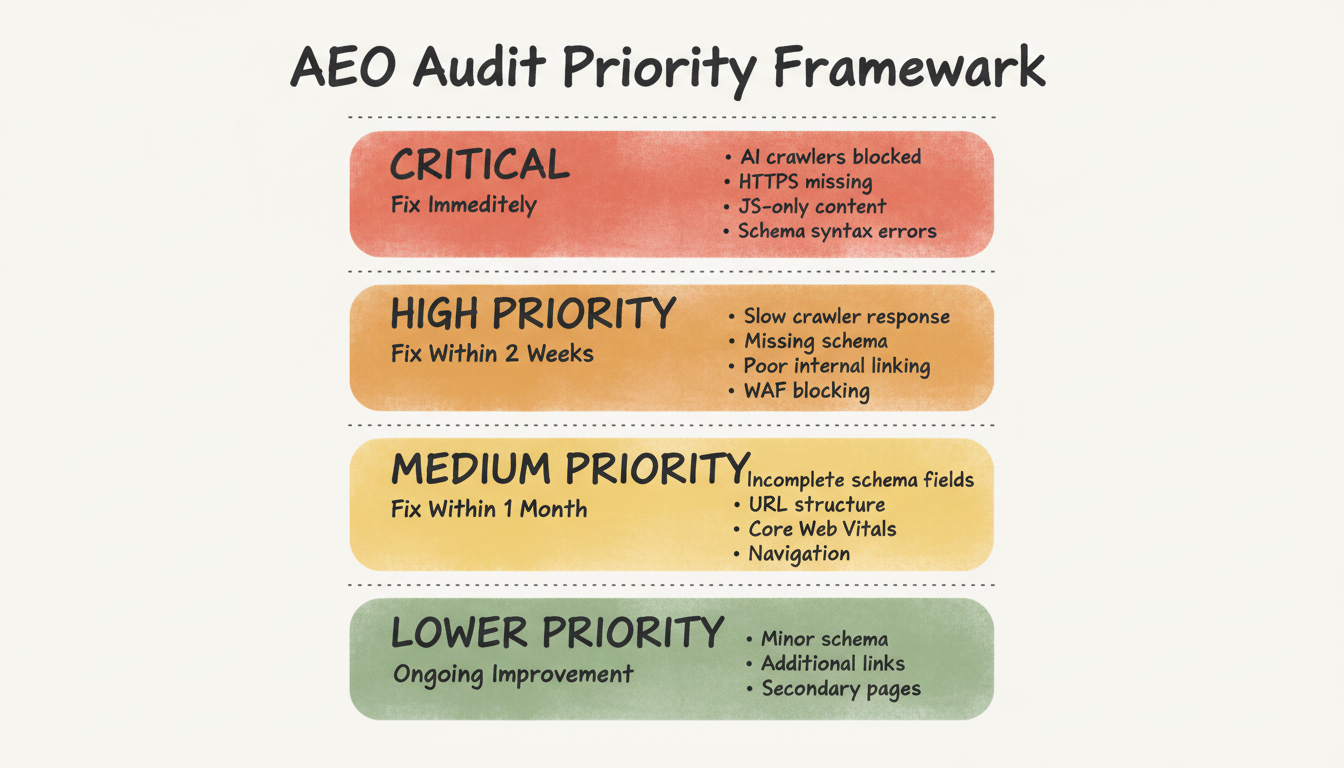

Not all issues require immediate attention. Prioritize by impact.
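Prioritizing by impact can be as simple as sorting findings by a severity rank. The sketch below uses the four impact levels from the documentation template (Critical, High, Medium, Low); the `Finding` class and the sample findings are illustrative assumptions.

```python
from dataclasses import dataclass

# Lower rank means the issue should be handled sooner.
IMPACT_RANK = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3}


@dataclass
class Finding:
    issue: str
    impact: str  # one of the keys in IMPACT_RANK


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings from highest to lowest impact (stable for ties)."""
    return sorted(findings, key=lambda finding: IMPACT_RANK[finding.impact])


audit = [
    Finding("Tracking parameters in internal links", "Low"),
    Finding("GPTBot blocked in robots.txt", "Critical"),
    Finding("Article schema missing on blog posts", "High"),
]
for finding in prioritize(audit):
    print(finding.impact, "-", finding.issue)
```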

Convert audit findings into an implementation roadmap. For comprehensive technical optimization guidance, review our complete guide to AEO tools to select the right solutions for your needs.

Documentation template:

Issue: [Specific finding]

Impact: [Critical/High/Medium/Low]

Current State: [What's happening now]

Target State: [Desired outcome]

Implementation: [Specific steps]

Verification: [How to confirm fix]

Conduct thorough AEO technical audits:

Technical audits reveal hidden barriers to AI visibility. Regular assessment ensures your site remains accessible as AI systems and your content evolve.
