The Generative Engine Optimization paper published by researchers at Princeton University and Georgia Tech established the academic foundation for GEO as a legitimate discipline. Published in the KDD 2024 proceedings, this research introduced systematic methods for optimizing content visibility in AI-generated responses and documented measurable improvements from specific optimization techniques.

This guide examines the paper's key findings and explains their practical implications for marketers optimizing content for AI search platforms.

The Princeton GEO paper emerged as AI search platforms gained significant user adoption. ChatGPT, Perplexity, and Google's AI features were transforming how users discover information. Yet no systematic research existed on how content creators could improve visibility in these new environments.

Traditional SEO research focused on ranked search results. The GEO paper asked a different question: what makes content citation-worthy when AI systems synthesize answers from multiple sources?

The researchers developed the GEO framework—Generative Engine Optimization—as the formal methodology for addressing this challenge.

The paper documented several significant findings that continue shaping GEO practice.

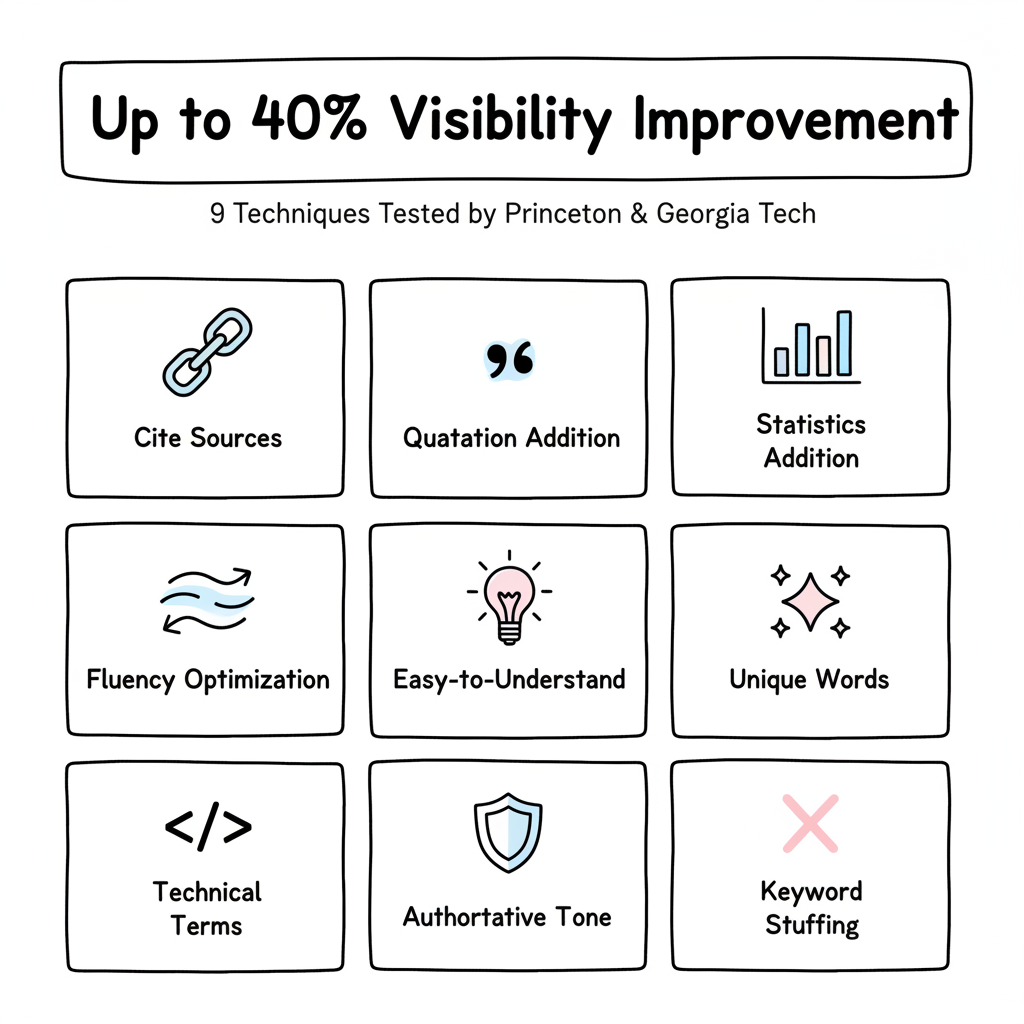

The headline finding: specific optimization techniques improved content visibility in AI-generated responses by up to 40%. This wasn't speculation—the researchers measured visibility changes across multiple AI platforms using controlled experiments.

The improvement varied based on technique and content type, but the overall finding validated that optimization efforts produce measurable results in AI search environments. Companies implementing enterprise AI SEO platforms can apply these findings to systematically improve their content's citation rates across generative engines.

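The research tested nine distinct optimization approaches: Cite Sources, Quotation Addition, Statistics Addition, Fluency Optimization, Easy-to-Understand, Authoritative, Technical Terms, Unique Words, and Keyword Stuffing.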

Different techniques performed better for different content domains. Technical topics benefited from statistical additions and authoritative citations. Consumer topics performed better with simplified explanations and quotations.

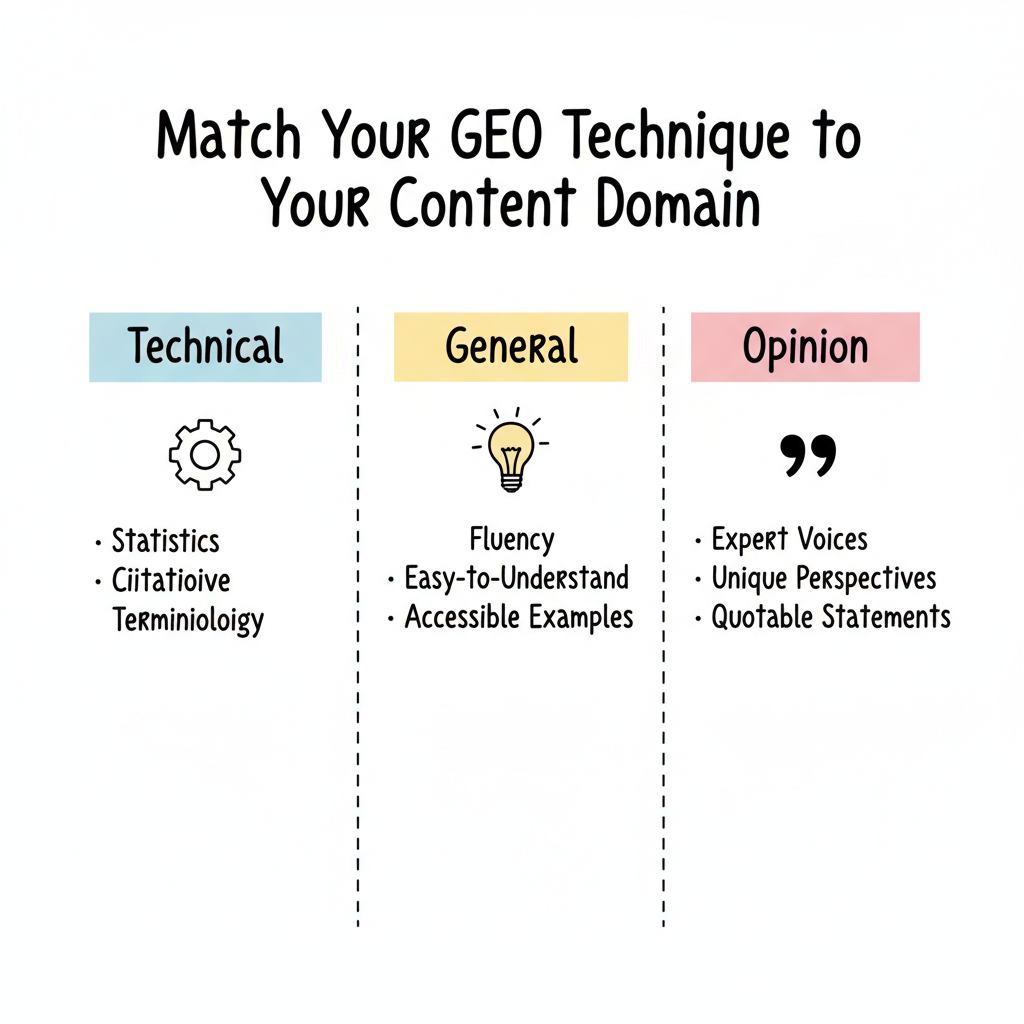

The paper revealed that optimization effectiveness varies significantly by content domain. No single technique works universally across all topics.

For scientific and technical content, citation additions and statistics produced the strongest visibility improvements. For general interest topics, fluency optimization and easy-to-understand approaches proved more effective. Organizations migrating to AEO (answer engine optimization) should account for these domain-specific patterns when transitioning their content strategy.

This finding emphasizes that GEO requires tailored approaches rather than universal formulas.

The research confirmed that AI systems evaluate source quality when selecting content to cite. Higher domain authority, established expertise signals, and consistent information patterns increased citation likelihood.

This aligns with Google's E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness) but applies specifically to how AI systems select citation sources rather than how search engines rank pages.

Understanding the research methodology helps evaluate the findings' reliability.

The researchers created controlled experiments where they modified content using each optimization technique, then measured how frequently AI systems cited the modified versus original content when generating responses to relevant queries.

This controlled approach isolated the impact of specific changes rather than observing correlations in uncontrolled environments.
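The comparison described above can be sketched as a citation-rate calculation over two runs of the same queries. This is a minimal illustration, not the paper's evaluation code: the `Response` record, the source ID `"page"`, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One AI-generated answer and the source IDs it cited (hypothetical record)."""
    query: str
    cited_sources: list[str]


def citation_rate(responses: list[Response], source_id: str) -> float:
    """Fraction of responses that cite the given source at least once."""
    if not responses:
        return 0.0
    hits = sum(1 for r in responses if source_id in r.cited_sources)
    return hits / len(responses)


def relative_lift(baseline: float, treated: float) -> float:
    """Relative change of treated over baseline; 0.4 would mean +40%."""
    if baseline == 0:
        raise ValueError("baseline rate is zero; relative lift is undefined")
    return (treated - baseline) / baseline


# Made-up data: the same four queries run against the original and modified page.
original_run = [
    Response("q1", ["page", "other"]),
    Response("q2", ["other"]),
    Response("q3", ["page"]),
    Response("q4", ["other"]),
]
modified_run = [
    Response("q1", ["page", "other"]),
    Response("q2", ["page"]),
    Response("q3", ["page"]),
    Response("q4", ["other"]),
]

base = citation_rate(original_run, "page")     # cited in 2 of 4 responses
treated = citation_rate(modified_run, "page")  # cited in 3 of 4 responses
lift = relative_lift(base, treated)
```

Holding the query set fixed and changing only the page is what lets the lift be attributed to the edit rather than to differences between queries.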

The research included multiple AI platforms to ensure findings weren't specific to one system. Different platforms showed varying preferences, but overall patterns remained consistent across systems.

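Visibility was measured by how often and how prominently content appeared as a source in AI-generated responses for target queries. The paper's main metric, position-adjusted word count, weights the share of a response attributed to a source by how early that material appears; a second, subjective impression metric scores qualities such as relevance and influence. These direct measurements capture the core GEO outcome rather than proxy metrics.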
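As a rough illustration of a position-weighted visibility score, the sketch below computes the share of a response's words attributed to one source, discounting sentences that appear later. The exponential decay and the one-citation-per-sentence input format are simplifying assumptions for illustration, not the paper's evaluation code, and the sample response is made up.

```python
import math


def position_adjusted_word_count(
    sentences: list[tuple[str, str]], source_id: str
) -> float:
    """Share of a response's words attributed to source_id, decayed by position.

    sentences: (text, cited_source_id) pairs in response order. One citation
    per sentence is a simplifying assumption.
    """
    total_words = sum(len(text.split()) for text, _ in sentences)
    if total_words == 0:
        return 0.0
    n = len(sentences)
    weighted = 0.0
    for pos, (text, cited) in enumerate(sentences):
        if cited == source_id:
            # Earlier sentences (small pos) keep more of their word count.
            weighted += len(text.split()) * math.exp(-pos / n)
    return weighted / total_words


# Hypothetical two-sentence response citing sources "a" and "b".
response = [
    ("GEO improves visibility in generative engines.", "a"),
    ("Keyword stuffing did not help.", "b"),
]
score_a = position_adjusted_word_count(response, "a")
score_b = position_adjusted_word_count(response, "b")
```

Source "a" scores higher here both because its sentence is longer and because it comes first, which is the behavior a position-weighted metric is meant to capture.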

The research findings translate into actionable guidance for content optimization.

AI systems extract specific passages to cite. Content structured with clear, quotable statements performs better than dense, complex text that resists extraction.

Practical applications:

The citation and statistics techniques that improved visibility work because they add credibility signals AI systems recognize. Understanding how schema validation works for AI search helps ensure your structured data reinforces these credibility signals.

Practical applications:

Since effectiveness varies by domain, match techniques to content type.

For technical content: prioritize statistics, citations, and authoritative terminology.

For general content: prioritize clarity, accessible explanations, and relatable examples.

For opinion content: prioritize expert voices, unique perspectives, and quotable statements.
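The guidance above can be captured as a small lookup table for use in an editorial checklist or content pipeline. This is a sketch only: the category keys and the `recommended_techniques` helper are hypothetical names, and the priorities simply restate the three recommendations above.

```python
# Priorities per content type, restating the guidance above.
TECHNIQUES_BY_CONTENT_TYPE = {
    "technical": ["statistics", "citations", "authoritative terminology"],
    "general": ["clarity", "accessible explanations", "relatable examples"],
    "opinion": ["expert voices", "unique perspectives", "quotable statements"],
}


def recommended_techniques(content_type: str) -> list[str]:
    """Return the prioritized techniques for a content type (case-insensitive)."""
    key = content_type.strip().lower()
    if key not in TECHNIQUES_BY_CONTENT_TYPE:
        raise KeyError(f"unknown content type: {content_type!r}")
    # Return a copy so callers cannot mutate the shared table.
    return list(TECHNIQUES_BY_CONTENT_TYPE[key])


technical_plan = recommended_techniques("Technical")
```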

The research included keyword stuffing, a traditional SEO tactic, among the tested methods and found it ineffective or counterproductive. AI systems evaluate content quality holistically rather than responding to keyword density.

This finding reinforces that GEO requires genuine quality improvements rather than gaming attempts.

The research established foundational knowledge while acknowledging limitations.

AI platforms continue developing. Techniques effective when the research was conducted may need adjustment as platforms update their selection criteria.

The principles—quality, authority, extractability—remain relevant even as specific implementation details evolve.

As more content creators implement GEO techniques, the competitive baseline shifts. Early adopters gain advantages that diminish as optimization becomes widespread.

This dynamic mirrors traditional SEO evolution—baseline optimization becomes table stakes while differentiation requires deeper quality investments.

Different AI platforms prioritize different signals. Content optimized for ChatGPT may perform differently on Perplexity or Google AI Overviews. Comprehensive GEO requires understanding platform-specific patterns, including citation patterns in Microsoft Copilot's generative search features.

The Princeton GEO paper established academic legitimacy for AI visibility optimization. Before this research, GEO was speculation and anecdote. After publication, practitioners had documented evidence that specific techniques produce measurable improvements.

The 40% visibility improvement headline captured attention, but the deeper contribution was the systematic framework for understanding and measuring AI visibility optimization.

Subsequent research and industry practice build on this foundation, testing additional techniques and refining understanding of what makes content citation-worthy in AI search environments.

The paper was published in the KDD 2024 conference proceedings. Search for "Generative Engine Optimization" with authors from Princeton University and Georgia Tech. Academic databases and the conference proceedings contain the full paper.

The core principles remain relevant—quality signals, extractable structure, and authority indicators continue influencing AI citation decisions. Specific technique effectiveness may shift as platforms evolve, but the foundational framework continues guiding effective GEO practice.

Start with the techniques matching your content domain. For technical content, add citations and statistics. For general content, focus on clarity and accessibility. Structure all content with extractable statements AI systems can cite directly.
