When you ask ChatGPT a question, it doesn't simply recall memorized information. When Perplexity delivers an answer with citations, it's actively pulling from the web in real time. Understanding how these systems actually work reveals why certain content gets cited and other content gets ignored.

Here's the technology behind AI answer engines—and what it means for your visibility strategy.

Most answer engines rely on Retrieval-Augmented Generation (RAG), a framework that combines information retrieval with AI text generation. The RAG market reached $1.2 billion in 2023 and continues growing at nearly 50% annually as enterprises adopt it for AI applications.

RAG solves a fundamental problem: Large Language Models (LLMs) know a lot from training data, but they can't access your internal documents, yesterday's news, or anything else outside that data. RAG connects LLMs to external knowledge bases at runtime, grounding responses in current, relevant data.

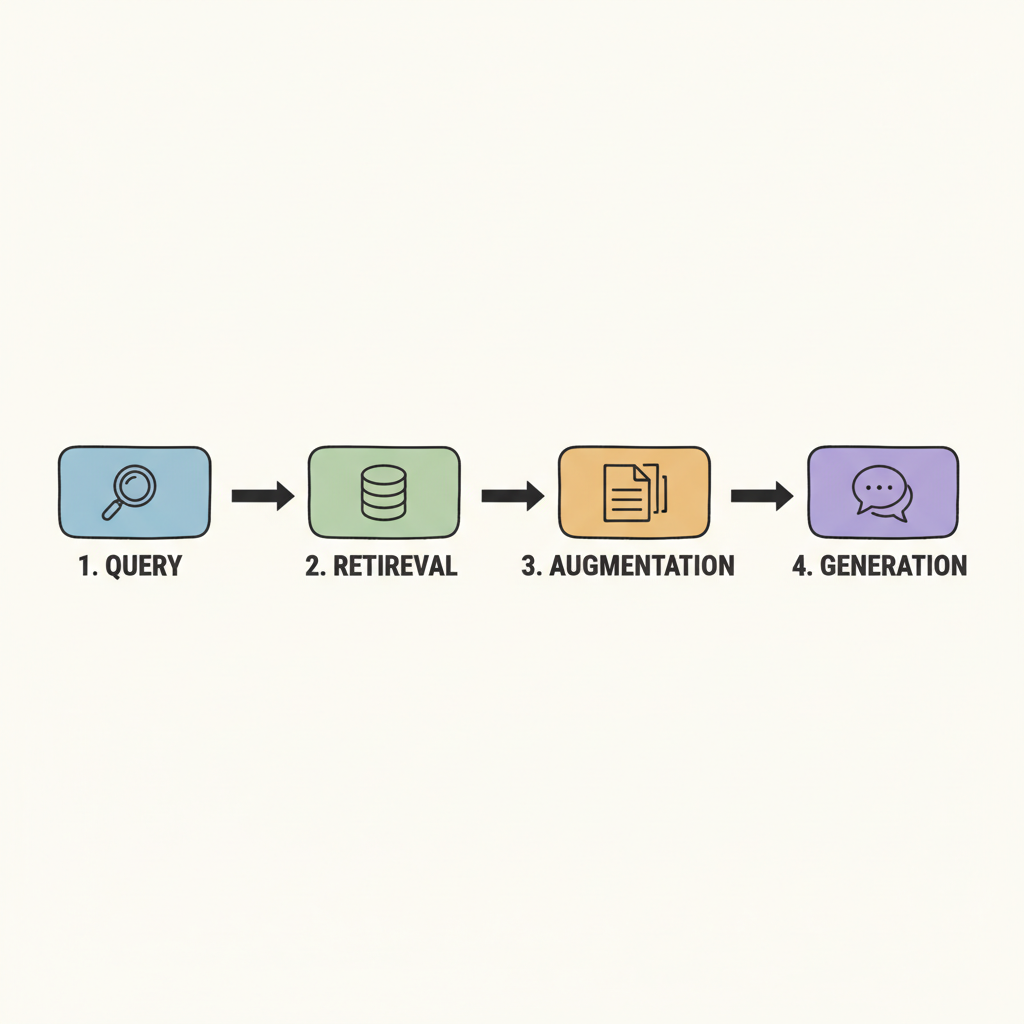

The basic RAG process:

This architecture explains why answer engines can cite current information—they're not relying solely on what the model "remembers" from training.
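The basic process described above (retrieve relevant passages, add them to the prompt, generate a grounded answer) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular answer engine is built: the `KNOWLEDGE_BASE` list, the word-overlap `score` function, and the prompt wording are all hypothetical, and the final generation step is left out because it would require a call to a real model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, document: str) -> int:
    """Toy relevance score: how often the query's distinct words occur in the document."""
    doc_counts = Counter(tokenize(document))
    return sum(doc_counts[token] for token in set(tokenize(query)))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k of the best-scoring documents, dropping those with no overlap."""
    ranked = sorted(documents, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augment the question with numbered passages so the model can cite them."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical external knowledge the model was never trained on.
KNOWLEDGE_BASE = [
    "Our store opens at 9am on weekdays.",
    "Refunds are processed within 5 business days.",
    "The office dog is named Biscuit.",
]

question = "How long do refunds take?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, passages)
print(prompt)  # this augmented prompt is what would be sent to the LLM
```

Production systems typically replace the word-overlap score with embedding similarity or a search index, but the shape of the pipeline stays the same: only passages that are retrieved can end up cited.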

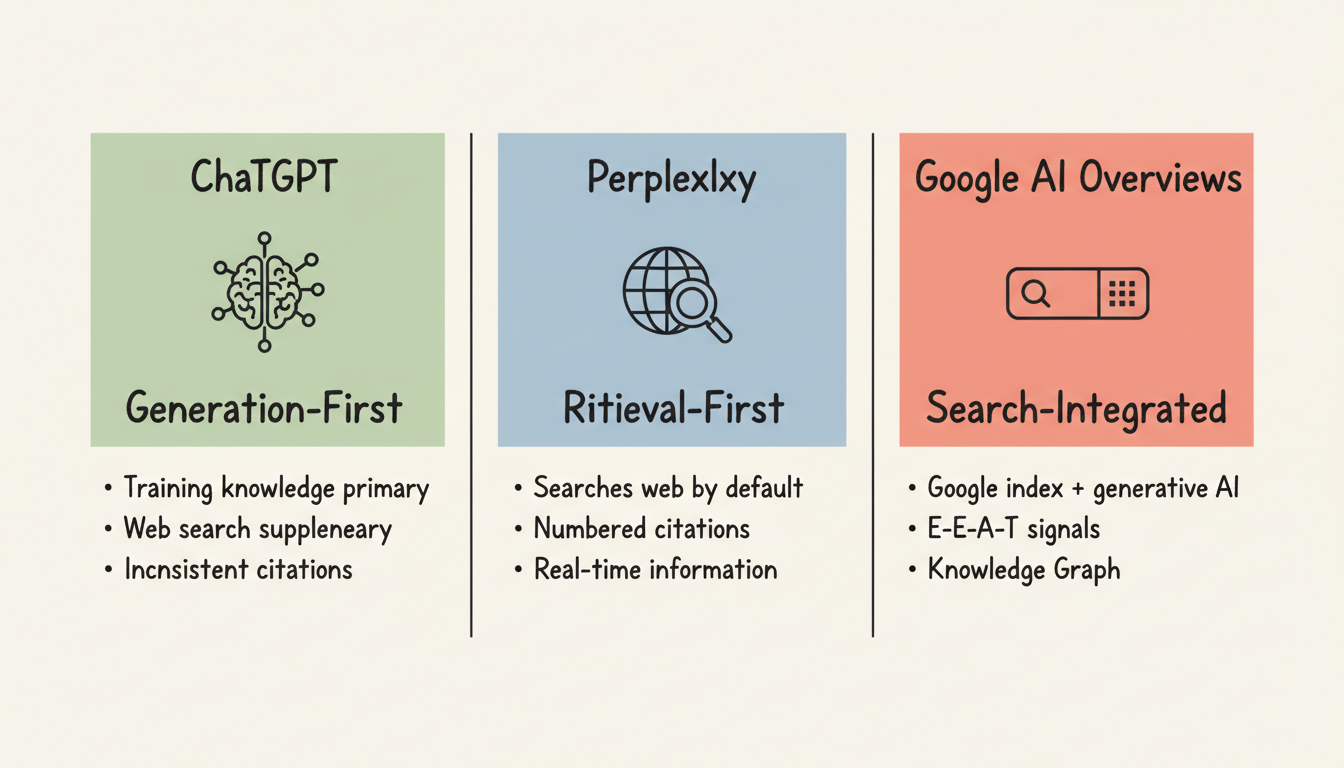

Not all answer engines work identically. Their architectures determine citation behavior, which makes understanding AI search ranking factors essential for content optimization.

ChatGPT operates primarily as a "generation-first" system. It generates responses mainly from its trained knowledge (parametric memory), adding web search when explicitly needed or when queries require current information.

Key characteristics:

When ChatGPT does retrieve external information, it evaluates sources based on authority signals, content clarity, and relevance to the query. For businesses looking to improve their ChatGPT SEO presence, understanding this generation-first approach is critical.

Perplexity operates as a "retrieval-first" system. It searches the web by default for every query, then synthesizes findings into structured answers with persistent citations.

Key characteristics:

Perplexity's architecture makes traditional SEO fundamentals directly relevant—content that ranks well in web search becomes available for Perplexity to cite. Organizations focusing on Perplexity AI search optimization can leverage this retrieval-first behavior to improve citation rates.
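The difference between the generation-first and retrieval-first designs comes down to when a search is triggered. The sketch below illustrates that decision only; the `RECENCY_CUES` word list and the `should_search` function are hypothetical, and the real products use far more sophisticated (and undisclosed) logic.

```python
from enum import Enum


class Mode(Enum):
    GENERATION_FIRST = "generation-first"  # answer from trained knowledge unless search is needed
    RETRIEVAL_FIRST = "retrieval-first"    # search the web for every query


# Hypothetical cue words suggesting a query needs current information.
RECENCY_CUES = {"today", "latest", "current", "yesterday", "news", "now"}


def should_search(query: str, mode: Mode) -> bool:
    """Decide whether to run a web search before generating an answer."""
    if mode is Mode.RETRIEVAL_FIRST:
        return True
    words = set(query.lower().replace("?", "").split())
    # Generation-first: search only when the query looks time-sensitive.
    return bool(words & RECENCY_CUES)


print(should_search("What is photosynthesis?", Mode.GENERATION_FIRST))     # False
print(should_search("What is photosynthesis?", Mode.RETRIEVAL_FIRST))      # True
print(should_search("What is the latest iPhone?", Mode.GENERATION_FIRST))  # True
```

Under this simplified model, an evergreen question reaches the live web only on the retrieval-first path, which is why search visibility matters more directly there.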

Google AI Overviews combine Google's search infrastructure with generative AI. They pull from Google's indexed web content, evaluate sources using established search quality signals, and generate synthesized summaries.

Key characteristics:

AI systems evaluate multiple factors when deciding which sources to cite:

Your content needs structured data that tells AI systems exactly what each piece of information represents. JSON-LD schema markup helps answer engines parse and understand content meaning.

Without proper structure, AI systems may understand your content less accurately, or skip it entirely when assembling responses. Person schema markup can help establish the entity relationships that knowledge graphs and AI systems recognize.
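As an illustration of what such markup looks like, the following sketch builds a schema.org `Person` object and wraps it in the script tag that carries JSON-LD on a web page. The person, organization, and URLs are placeholders, and the `to_json_ld_script` helper is a hypothetical name; only the schema.org property names (`name`, `jobTitle`, `worksFor`, `sameAs`) come from the vocabulary itself.

```python
import json

# Placeholder entity data; replace with real, consistent details.
person = {
    "@context": "https://schema.org",
    "@type": "Person",
    "name": "Jane Example",
    "jobTitle": "Head of Research",
    "worksFor": {
        "@type": "Organization",
        "name": "Example Co",
        "url": "https://example.com",
    },
    # sameAs links the entity to other profiles describing the same person.
    "sameAs": [
        "https://www.linkedin.com/in/jane-example",
        "https://example.com/team/jane-example",
    ],
}


def to_json_ld_script(data: dict) -> str:
    """Serialize a dict as JSON-LD inside an HTML script tag."""
    # Escape "</" so a string value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


script_tag = to_json_ld_script(person)
print(script_tag)
```

The resulting tag is usually placed in the page's `<head>`. The `sameAs` links are what tie the on-page entity to its other profiles, which supports the entity verification discussed later in this article.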

AI models prefer concise, fact-based answers they can extract directly. Research shows that a direct answer of 40 to 60 words is more likely to be cited than a 500-word narrative.

The answer-first format works because AI systems scan for extractable statements. When your key insight is buried in paragraph eight, it may never surface in AI responses.
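One way to audit pages against this guideline is to measure the opening paragraph. The sketch below assumes paragraphs are separated by blank lines and uses the 40 to 60 word range mentioned above as default thresholds; the function names and the sample `page` text are illustrative.

```python
def first_paragraph(text: str) -> str:
    """Return the first non-empty paragraph, treating blank lines as separators."""
    for block in text.split("\n\n"):
        if block.strip():
            return block.strip()
    return ""


def is_answer_first(text: str, low: int = 40, high: int = 60) -> bool:
    """Check whether the opening paragraph falls inside the target word range."""
    word_count = len(first_paragraph(text).split())
    return low <= word_count <= high


# Illustrative page: a short direct answer followed by supporting detail.
page = (
    "Retrieval-Augmented Generation connects a language model to an external "
    "knowledge base at query time.\n\n"
    "The longer explanation, history, and examples follow in later paragraphs."
)

opening = first_paragraph(page)
print(len(opening.split()), "words in the opening paragraph")
print("Within target range:", is_answer_first(page))
```

A check like this only measures length. Whether the opening paragraph actually answers the question still needs a human read.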

Answer engines evaluate domain authority, content freshness, and consistency across multiple sources. When multiple authoritative sources echo the same information, AI systems gain confidence in citing that data.

Authority signals include:

AI systems verify that entities (brands, people, organizations) exist and are credible. They cross-reference information across Wikipedia, Wikidata, business directories, and other authoritative databases.

If your entity information is inconsistent across the web, AI systems may trust your content less—even if the content itself is accurate.
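A simple consistency audit can surface these mismatches before an AI system encounters them. The sketch below compares the same fields across several listings; the `profiles` data, the source names, and the normalization rules are all hypothetical, and a real audit would pull the values from the live listings.

```python
def normalize(value: str) -> str:
    """Lowercase, drop commas and periods, and collapse whitespace."""
    cleaned = value.lower().replace(",", " ").replace(".", " ")
    return " ".join(cleaned.split())


def find_inconsistencies(profiles: dict[str, dict[str, str]]) -> dict[str, set[str]]:
    """Return each field whose normalized value differs between sources."""
    seen: dict[str, set[str]] = {}
    for fields in profiles.values():
        for field, value in fields.items():
            seen.setdefault(field, set()).add(normalize(value))
    return {field: values for field, values in seen.items() if len(values) > 1}


# Hypothetical entity data as it appears on three different sources.
profiles = {
    "website": {"name": "Example Co.", "city": "Austin"},
    "directory": {"name": "Example Co", "city": "Austin"},
    "wiki": {"name": "Example Company", "city": "Austin"},
}

issues = find_inconsistencies(profiles)
print(issues)  # only the "name" field is reported
```

Here "Example Co." and "Example Co" normalize to the same string, so only the "Example Company" variant is flagged as a conflict.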

RAG technology continues evolving. Industry analysts describe a shift from "Retrieval-Augmented Generation" toward "Context Engines" with intelligent retrieval as the core capability.

Where RAG is heading:

For content creators, this evolution means structured, authoritative content becomes even more valuable. As AI systems get smarter about evaluating sources, quality signals matter more—not less.

Understanding answer engine technology reveals optimization priorities:

Structure content for extraction: Use clear headings, direct answer paragraphs, and schema markup that helps AI systems parse your content accurately.

Build verifiable authority: Establish consistent entity information across the web. Make it easy for AI systems to verify your credibility.

Optimize for both retrieval and generation: Retrieval-first systems (Perplexity) reward strong SEO. Generation-first systems (ChatGPT) reward comprehensive, authoritative content in their training data.

Maintain freshness: Real-time retrieval systems favor current content. Update important pages regularly to remain citable.

Some do. Perplexity and other retrieval-first systems often leverage search engine results to find content. This means traditional SEO directly impacts visibility in these platforms. ChatGPT's browsing feature also uses search, though it's not always engaged.

AI systems evaluate content structure, source authority, information freshness, and entity consistency. They look for clear, extractable answers from trustworthy sources. The specific weighting varies by platform and query type.

Core practices—structured data, clear answers, authority signals—benefit all platforms. But platform-specific tactics matter: Perplexity rewards strong SEO and freshness, while ChatGPT rewards comprehensive authority that influences training data.
