Optimizing content for generative AI differs from general content optimization. Generative platforms such as ChatGPT, Claude, and Perplexity synthesize information differently than Google's AI Overviews. They don't just extract snippets; they reconstruct answers from information drawn from multiple sources.

This workflow guides you through auditing, prioritizing, and optimizing content specifically for generative AI citation.

Large language models trained on web content develop preferences for certain source types:

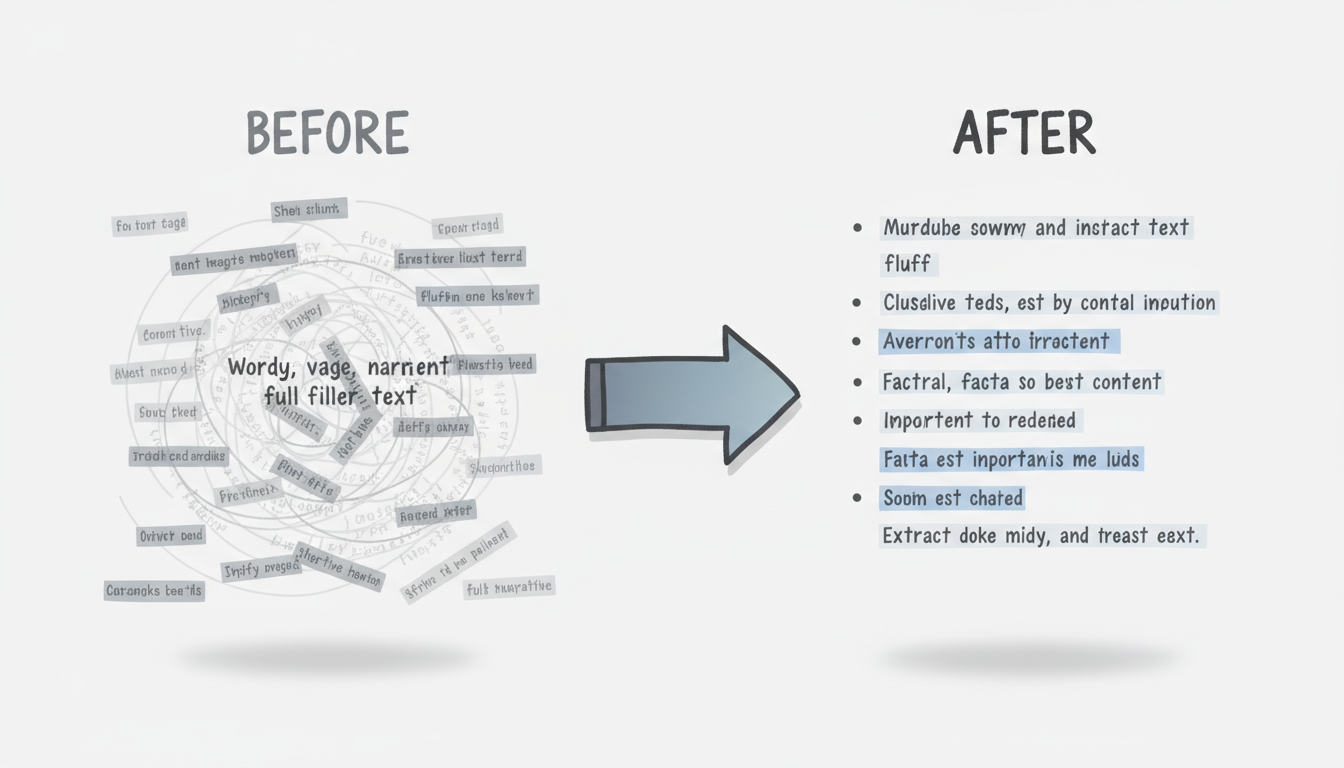

Information density matters. LLMs favor content that packs specific facts, data, and definitions into concise statements. Padding and filler dilute citation potential.

Authority signals persist. Content from recognized experts, established publications, and well-cited sources gets weighted higher in training data.

Clarity trumps cleverness. Straightforward explanations outperform content using excessive jargon, metaphors, or indirect language. LLMs need unambiguous information.

Factual consistency builds trust. Information appearing consistently across authoritative sources gets cited with higher confidence.

Understanding limitations reveals optimization opportunities:

Audit existing content against generative AI criteria rather than SEO metrics. When evaluating your content library, consider using AEO checker tools to systematically assess readiness for AI citation.

Audit checklist per page:

| Question | Poor | Adequate | Strong |
|---|---|---|---|
| Can paragraphs stand alone when extracted? | Run-on narratives | Some standalone | All paragraphs work alone |
| Does content state specific facts? | Vague generalizations | Some specifics | Data throughout |
| Is language direct and clear? | Jargon-heavy | Mixed | Plain language |
| Are claims verifiable? | Opinions only | Some sourced | Facts with attribution |

Scoring system:

Focus transformation effort on high-value content scoring 5-8.
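The scoring scale itself isn't shown here, so this sketch assumes Poor = 1, Adequate = 2, and Strong = 3 for each of the four checklist questions (totals run from 4 to 12) and treats 5 to 8 as the focus band. The point values, question keys, and function names are hypothetical; adjust them to whatever scale you actually use.

```python
from enum import IntEnum

# Hypothetical point values: the workflow's actual scoring scale is not shown.
class Rating(IntEnum):
    POOR = 1
    ADEQUATE = 2
    STRONG = 3

# One key per audit checklist question.
QUESTIONS = (
    "standalone_paragraphs",
    "specific_facts",
    "direct_language",
    "verifiable_claims",
)

def audit_score(ratings: dict[str, Rating]) -> int:
    """Sum the per-question ratings; every checklist question must be rated."""
    missing = [q for q in QUESTIONS if q not in ratings]
    if missing:
        raise ValueError(f"unrated questions: {missing}")
    return sum(int(ratings[q]) for q in QUESTIONS)

def in_focus_band(score: int, low: int = 5, high: int = 8) -> bool:
    """True when a page falls in the band targeted for transformation."""
    return low <= score <= high

page = {
    "standalone_paragraphs": Rating.POOR,
    "specific_facts": Rating.ADEQUATE,
    "direct_language": Rating.STRONG,
    "verifiable_claims": Rating.POOR,
}
score = audit_score(page)
print(score, in_focus_band(score))  # 7 True
```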

Not all content warrants optimization. Prioritize based on:

Topic suitability: Generative AI users ask informational questions. Transactional, navigational, and purely promotional content rarely earns citations.

Query alignment: Test whether real users ask generative AI the questions your content answers. Query ChatGPT or Perplexity with relevant prompts to check.

Competitive gaps: Identify topics where competitors get cited but you don't. These represent immediate opportunities.

Business value: Prioritize topics that drive leads, sales, or brand awareness when cited.

Priority matrix:

| | Low Query Volume | High Query Volume |
|---|---|---|
| High Business Value | Selective optimization | Top priority |
| Low Business Value | Skip | Consider optimizing |
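The priority matrix translates directly into a lookup table. In this sketch, deciding what counts as "high" query volume or business value is left to you, and the topic names are hypothetical.

```python
from enum import Enum

class Level(Enum):
    LOW = "low"
    HIGH = "high"

# The priority matrix, keyed by (business value, query volume).
PRIORITY = {
    (Level.HIGH, Level.HIGH): "Top priority",
    (Level.HIGH, Level.LOW): "Selective optimization",
    (Level.LOW, Level.HIGH): "Consider optimizing",
    (Level.LOW, Level.LOW): "Skip",
}

def priority(business_value: Level, query_volume: Level) -> str:
    """Look up the recommended action for one topic."""
    return PRIORITY[(business_value, query_volume)]

# Hypothetical topics with their assessed (business value, query volume).
topics = {
    "pricing guide": (Level.HIGH, Level.HIGH),
    "company history": (Level.LOW, Level.LOW),
}
for name, (value, volume) in topics.items():
    print(f"{name}: {priority(value, volume)}")
```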

Transform prioritized content systematically. Before restructuring, understanding the key differences between SEO and AEO helps inform which elements to prioritize during transformation.

Step 1: Identify citation opportunities

Review content for passages that could answer common questions. Mark sections with high citation potential.

Step 2: Restructure paragraphs

Convert narrative paragraphs into extractable statements.

Before: "When it comes to choosing the right approach, there are several factors worth considering. Many professionals in the field have different opinions, but generally speaking, most would agree that taking the time to evaluate options carefully tends to produce better results."

After: "Effective approach selection requires evaluating three factors: implementation complexity, resource requirements, and expected outcomes. Evaluating these factors systematically produces measurably better results than intuitive selection."
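The "can paragraphs stand alone" question from the audit checklist can be approximated with a quick heuristic. This sketch, an assumption rather than a standard test, flags paragraphs that open with a word or phrase pointing back to earlier text; the `BACK_REFERENCES` list is hypothetical and worth tuning to your own writing.

```python
import re

# Hypothetical openers that usually refer back to earlier text. A paragraph that
# starts with one of them may not make sense when an AI system quotes it alone.
BACK_REFERENCES = (
    "this", "that", "these", "those", "it", "they",
    "however", "also", "as mentioned", "as noted",
)

def depends_on_context(paragraph: str) -> bool:
    """True if the paragraph's first words point back to something said earlier."""
    opening = paragraph.strip().lower()
    # \b stops "it" from matching words such as "items".
    return any(re.match(rf"{re.escape(p)}\b", opening) for p in BACK_REFERENCES)

def flag_paragraphs(text: str) -> list[int]:
    """0-based indexes of blank-line-separated paragraphs that may not stand alone."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    return [i for i, p in enumerate(paragraphs) if depends_on_context(p)]

sample = (
    "Effective approach selection requires evaluating three factors.\n\n"
    "This produces better results than intuitive selection.\n\n"
    "Implementation complexity measures the effort a change requires."
)
print(flag_paragraphs(sample))  # [1]
```

A flagged paragraph is a candidate for rewriting so it names its subject explicitly, as the "After" example does.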

Step 3: Add factual anchors

Insert specific data, statistics, and concrete examples. Generative AI cites specifics over generalizations.
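A rough way to spot paragraphs missing factual anchors is to count numeric tokens (figures, percentages, currency amounts) per sentence. The sketch below assumes that proxy; it measures specificity only, says nothing about whether the numbers are accurate, and the sample text is made up.

```python
import re

# Crude proxy for factual anchors: integers, decimals, percentages, currency amounts.
NUMERIC_TOKEN = re.compile(r"\$?\d+(?:[.,]\d+)*%?")
# Split after sentence-ending punctuation followed by whitespace.
SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")

def anchor_density(paragraph: str) -> float:
    """Numeric tokens per sentence. Signals specificity, not accuracy."""
    sentences = [s for s in SENTENCE_BREAK.split(paragraph.strip()) if s]
    if not sentences:
        return 0.0
    return len(NUMERIC_TOKEN.findall(paragraph)) / len(sentences)

# Made-up sample text for illustration only.
vague = "Many teams see better results over time. Results vary."
specific = "Teams that audited 120 pages cut bounce rate by 18%. The audit took 3 weeks."
print(anchor_density(vague), anchor_density(specific))  # 0.0 1.5
```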

Step 4: Create definition blocks

LLMs frequently need clear definitions. Add explicit definitions for key terms:

"[Term] is [clear definition]. [Additional context in one sentence]."

Place definitions near first mention of important concepts.
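To check the "place definitions near first mention" guideline across a page, the sketch below measures how many paragraphs separate a term's first mention from the first sentence following the `[Term] is ...` template. The function name and regex are hypothetical, and it only recognizes definitions that begin a sentence with the exact term.

```python
import re
from typing import Optional

def definition_gap(paragraphs: list[str], term: str) -> Optional[int]:
    """Paragraphs between a term's first mention and its "[Term] is ..." definition.

    Returns None if the term never appears or is never defined with that template.
    """
    mention = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
    # The definition template: the term opens a sentence and is followed by "is" or "are".
    definition = re.compile(rf"(?:^|[.!?]\s+){re.escape(term)}\s+(?:is|are)\b", re.IGNORECASE)
    first_mention = next((i for i, p in enumerate(paragraphs) if mention.search(p)), None)
    first_definition = next((i for i, p in enumerate(paragraphs) if definition.search(p)), None)
    if first_mention is None or first_definition is None:
        return None
    return first_definition - first_mention

page = [
    "Answer engine optimization changes how teams plan content.",
    "Audits come first, then prioritization.",
    "Answer engine optimization is the practice of structuring content so AI systems can cite it.",
]
print(definition_gap(page, "answer engine optimization"))  # 2
```

A gap of 0 means the definition sits in the same paragraph as the first mention; larger gaps suggest moving it earlier.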

Step 5: Build answer sections

Structure content to directly answer anticipated questions:

## What Is [Topic]?

[Direct answer in first sentence]. [Supporting context]. [Specific example or data point].

This format matches how generative AI surfaces information in responses.
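If you produce many answer sections, a small template helper keeps the order fixed: direct answer, supporting context, specific example. This is a hypothetical helper, not part of any tool; it only assembles Markdown and cannot judge whether the first sentence truly answers the question.

```python
def answer_section(topic: str, answer: str, context: str, example: str) -> str:
    """Assemble a "What Is [Topic]?" section: direct answer, then context, then a specific."""
    parts = [s.strip() for s in (answer, context, example)]
    if not all(parts):
        raise ValueError("answer, context, and example are all required")
    # Make sure each part ends as a complete sentence before joining them.
    body = " ".join(p if p.endswith((".", "!", "?")) else p + "." for p in parts)
    return f"## What Is {topic}?\n\n{body}\n"

# Reproduces the placeholder template from this step.
print(answer_section(
    "[Topic]",
    "[Direct answer in first sentence]",
    "[Supporting context]",
    "[Specific example or data point]",
))
```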

After content transformation, verify that technical elements support generative AI access. Following technical AEO optimization best practices helps ensure AI crawlers can efficiently access and process your optimized content.

Crawler access:

Page structure:

Load performance:
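One crawler-access check you can script is whether robots.txt allows the major AI crawlers. The sketch below uses Python's standard `urllib.robotparser` against a robots.txt string. The user-agent tokens `GPTBot`, `ClaudeBot`, and `PerplexityBot` are names published by OpenAI, Anthropic, and Perplexity, but vendors add and rename crawlers, so confirm the current list. The sample rules are hypothetical, and robots.txt is only one part of crawler access.

```python
from urllib.robotparser import RobotFileParser

# Vendor-published crawler tokens; verify them against each vendor's documentation.
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")

def crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Report whether each AI crawler may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {agent: parser.can_fetch(agent, url) for agent in AI_CRAWLERS}

# Hypothetical rules: block GPTBot, allow everyone else.
robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""
print(crawler_access(robots, "https://example.com/guide"))
# {'GPTBot': False, 'ClaudeBot': True, 'PerplexityBot': True}
```

In practice you would fetch your live robots.txt first; parsing a string keeps the example offline.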

Test optimization results against actual generative AI behavior.

Testing protocol:

Refinement actions:
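One simple way to compare test rounds is to record, for each prompt you run on each platform, whether the answer cited your site, then compute a per-platform citation rate. The `PromptCheck` record and the sample results are hypothetical; collecting the results is manual or uses whatever tooling you already have.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class PromptCheck:
    """One recorded test: whether a platform's answer to a prompt cited your site."""
    platform: str
    prompt: str
    cited: bool

def citation_rates(checks: list[PromptCheck]) -> dict[str, float]:
    """Per platform, the share of tested prompts whose answer cited your content."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for check in checks:
        totals[check.platform] += 1
        hits[check.platform] += int(check.cited)
    return {platform: hits[platform] / totals[platform] for platform in totals}

# Hypothetical results from one test round.
checks = [
    PromptCheck("ChatGPT", "what is answer engine optimization", True),
    PromptCheck("ChatGPT", "how to audit content for ai citation", False),
    PromptCheck("Perplexity", "what is answer engine optimization", True),
]
print(citation_rates(checks))  # {'ChatGPT': 0.5, 'Perplexity': 1.0}
```

Running the same prompt set before and after a change gives a like-for-like comparison.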

Stuffing every paragraph with keywords and statistics creates unnatural content. Generative AI recognizes manipulation patterns.

Guideline: Content should read naturally when spoken aloud. If it sounds robotic, you've over-optimized.

Never simplify nuanced information into misleading statements. Inaccurate citations damage credibility when users verify information.

Guideline: Preserve accuracy even when restructuring. Complexity requiring context should retain that context.

Generative AI training data has cutoffs. Content optimized once but never updated can lose visibility to newer sources as models retrain.

Guideline: Update high-priority content quarterly. Add visible timestamps indicating freshness.
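The quarterly update guideline can be enforced with a staleness check over your page inventory. This sketch assumes you have a last-updated date per URL and approximates a quarter as 92 days; the URLs and dates are placeholders.

```python
from datetime import date, timedelta

# Assumption: "quarterly" is approximated as 92 days.
REVIEW_INTERVAL = timedelta(days=92)

def stale_pages(last_updated: dict[str, date], today: date) -> list[str]:
    """URLs not updated within one review interval, oldest first."""
    overdue = [(updated, url) for url, updated in last_updated.items()
               if today - updated > REVIEW_INTERVAL]
    return [url for _, url in sorted(overdue)]

# Placeholder inventory: URL -> date of last substantive update.
pages = {
    "/guide": date(2024, 1, 10),
    "/faq": date(2024, 5, 2),
    "/pricing": date(2023, 11, 20),
}
print(stale_pages(pages, today=date(2024, 6, 1)))  # ['/pricing', '/guide']
```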

ChatGPT, Claude, and Perplexity use different models with different preferences. Optimizing only for ChatGPT misses other platforms. Organizations developing a multi-platform AI search strategy recognize that each generative AI platform requires tailored optimization approaches.

Guideline: Test across multiple generative platforms and optimize for common citation patterns.
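To find common citation patterns, collect the sources each platform cited for the same prompt and keep those cited on several platforms. The sketch assumes you already have those citation sets; the domains are placeholders and `min_platforms` is an arbitrary default.

```python
from collections import Counter

def shared_sources(citations: dict[str, set[str]], min_platforms: int = 2) -> list[str]:
    """Sources cited by at least `min_platforms` platforms, most widely cited first."""
    # Each platform contributes a set, so a source counts at most once per platform.
    counts = Counter(source for sources in citations.values() for source in sources)
    shared = [s for s, n in counts.items() if n >= min_platforms]
    return sorted(shared, key=lambda s: (-counts[s], s))

# Placeholder citation sets gathered for the same prompt on each platform.
citations = {
    "ChatGPT": {"a.example", "b.example"},
    "Claude": {"a.example", "c.example"},
    "Perplexity": {"a.example", "b.example", "d.example"},
}
print(shared_sources(citations))  # ['a.example', 'b.example']
```

Studying what the widely cited sources have in common is one way to decide which patterns to emulate.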

Track generative AI performance separately from traditional SEO metrics.

Primary metrics:

Secondary metrics:

Review metrics monthly to guide ongoing optimization.
