Traditional SEO dashboards fail to capture the metrics that matter in AI search. Citation frequency, LLM referral traffic, and brand mention sentiment require new measurement approaches that most analytics platforms don't natively support. Designing an effective AI search analytics dashboard means identifying which metrics to track, how to collect them, and how to visualize insights that drive optimization decisions.

AI search visibility works differently from traditional organic search, so it needs its own measurement framework.

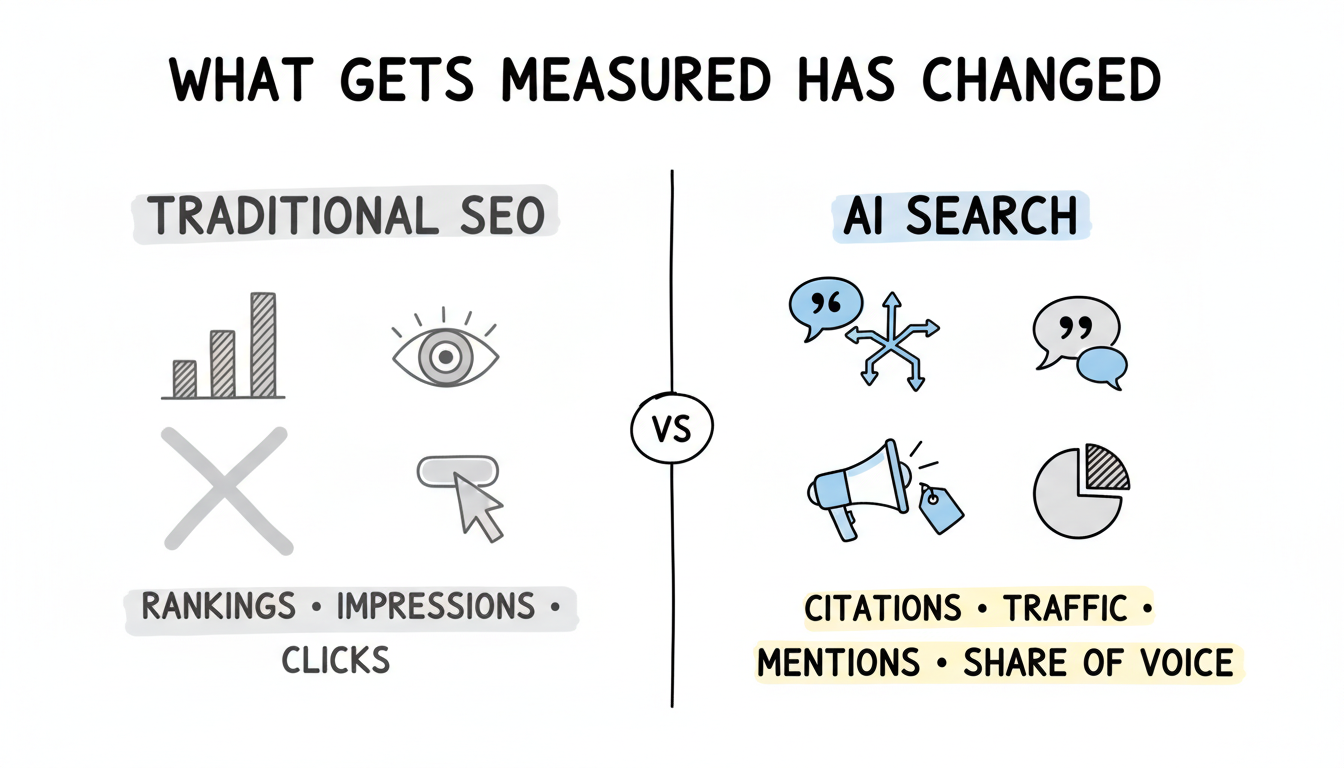

Why traditional dashboards fall short: Standard SEO dashboards track rankings, impressions, and clicks, metrics that don't translate directly to AI search performance. When ChatGPT cites your content, there is no traditional ranking position to report. When Perplexity references your page, the context of the citation matters as much as the citation itself. Traditional tools weren't built for this reality.

The new measurement requirements:

Research indicates that ChatGPT drives 89% of measured AI referral traffic to websites, while Perplexity shows 6.2x higher referral efficiency, meaning Perplexity users click through to cited sources at much higher rates. Patterns this different across platforms call for dashboards that report each platform separately rather than aggregating everything into a single metric.
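The text does not define "referral efficiency." A minimal sketch, assuming it means referral visits per observed citation, keeps each platform's traffic share and efficiency side by side instead of folding them into one AI total. The `PlatformStats` fields and metric names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PlatformStats:
    platform: str
    referral_visits: int  # visits attributed to this platform in the period
    citations: int        # citations observed for this platform in your monitoring sample


def platform_breakdown(stats: list[PlatformStats]) -> dict[str, dict[str, float]]:
    """Report traffic share and visits per citation separately for each platform."""
    total_visits = sum(s.referral_visits for s in stats)
    breakdown = {}
    for s in stats:
        breakdown[s.platform] = {
            # Fraction of all AI referral visits that came from this platform
            "traffic_share": s.referral_visits / total_visits if total_visits else 0.0,
            # Assumed definition of referral efficiency: visits per observed citation
            "visits_per_citation": s.referral_visits / s.citations if s.citations else 0.0,
        }
    return breakdown
```

A platform can hold a small share of traffic yet turn each citation into many more visits, which is exactly the pattern a single aggregate would hide.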

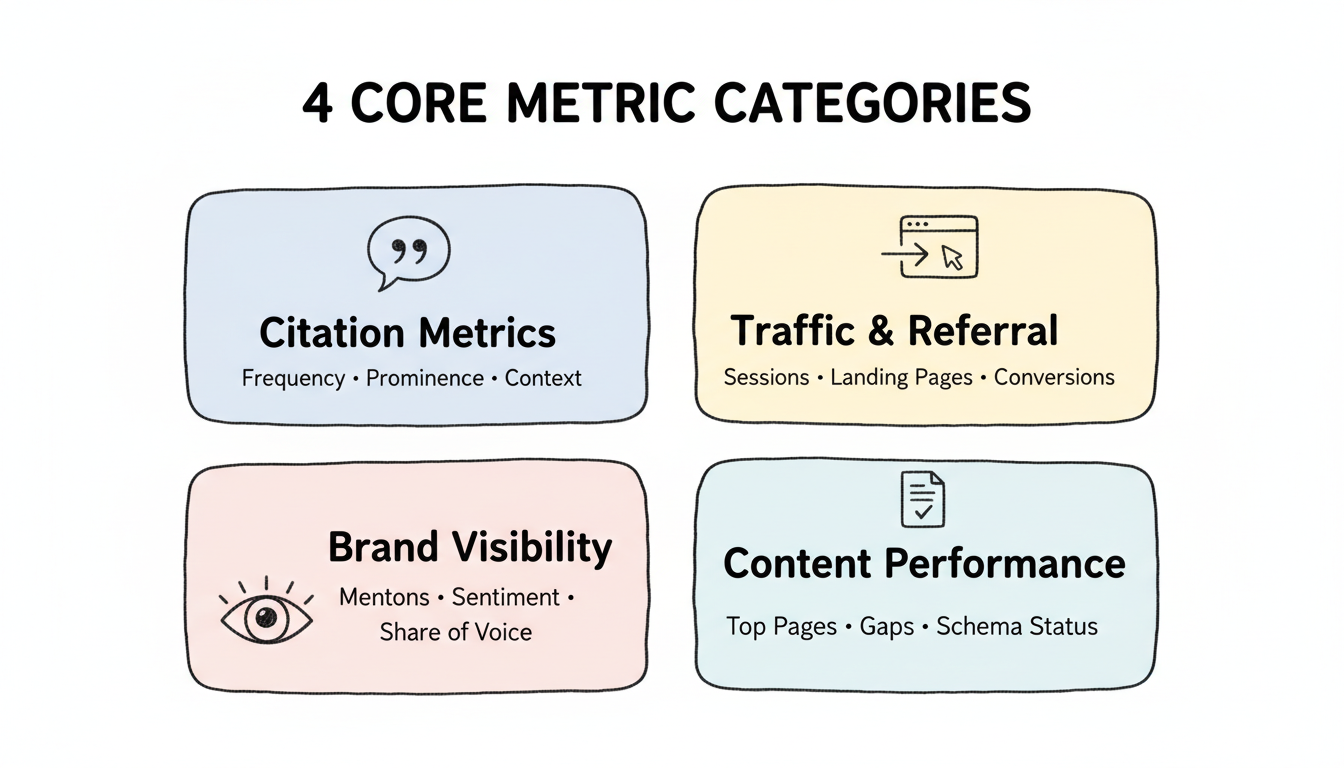

An effective AI search dashboard integrates four metric categories.

Track how often and where AI platforms reference your content.

Key citation metrics:

Platform-specific tracking: Different platforms require different monitoring approaches. For Google AI Overviews, Search Console performance data is the starting point, typically supplemented by SERP tracking that records when an AI Overview cites your pages. ChatGPT and Perplexity citations require specialized monitoring tools or API access for systematic tracking. Google AI Overviews also vary by country and region, so understanding those variations helps contextualize citation patterns across markets.
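Systematic sampling can be reduced to a simple rate: of the responses collected for a fixed prompt set, what fraction cite your domain? The sketch assumes responses have already been collected (through API access or a monitoring tool) as `(platform, query, cited_urls)` tuples; that record shape and the function names are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse


def cites_domain(url: str, domain: str) -> bool:
    """True if the URL's host is the domain itself or one of its subdomains."""
    domain = domain.lower()
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def citation_rate_by_platform(samples, domain: str) -> dict[str, float]:
    """samples: iterable of (platform, query, cited_urls) from sampled AI responses.

    Returns platform -> fraction of sampled responses that cite `domain`.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for platform, _query, cited_urls in samples:
        totals[platform] += 1
        if any(cites_domain(u, domain) for u in cited_urls):
            hits[platform] += 1
    return {p: hits[p] / totals[p] for p in totals}
```

Re-running the same prompt set on a schedule keeps the rate comparable over time; because AI responses vary between runs, larger samples give steadier estimates.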

Measure actual visits from AI search sources.

Traffic metrics:

Attribution challenges: Not all AI referral traffic identifies itself clearly. Some ChatGPT traffic appears as direct visits. Implement UTM parameters where possible and use referral source analysis to estimate unattributed AI traffic.
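Referral source analysis usually starts by mapping known AI hostnames to platforms, with the landing page's `utm_source` as a fallback (some AI platforms append values such as `chatgpt.com` to outbound links). The hostname list below is an assumption based on commonly seen AI referrers, not an official or exhaustive registry; check it against your own referral reports.

```python
from urllib.parse import urlparse, parse_qs

# Assumed mapping of referrer hostnames to AI platforms; extend it from your own logs.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def classify_session(referrer, landing_url):
    """Return the AI platform for a session, or None if it cannot be attributed."""
    # urlparse lowercases .hostname, so lookups are case-insensitive
    host = (urlparse(referrer).hostname or "") if referrer else ""
    if host in AI_REFERRERS:
        return AI_REFERRERS[host]
    # Fall back to utm_source on the landing page, e.g. ?utm_source=chatgpt.com
    params = parse_qs(urlparse(landing_url).query)
    for source in params.get("utm_source", []):
        if source.lower() in AI_REFERRERS:
            return AI_REFERRERS[source.lower()]
    return None
```

Sessions that come back as `None` stay unattributed; comparing their volume and landing pages with attributed AI sessions is one way to estimate how much AI traffic is hiding in direct visits.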

Monitor brand mentions in AI responses beyond direct citations.

Brand metrics:

Share of voice calculation: Measure what percentage of AI responses for your target topics mention your brand versus competitors. This share of voice metric indicates relative visibility in the AI search landscape. Comprehensive AEO marketing audits often reveal gaps between perceived brand authority and actual AI search visibility.
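The share-of-voice definition above translates directly into code. This sketch assumes you already have the response texts for your target-topic prompts; the brand names are placeholders, and whole-word matching is a simplification that will miss paraphrases and product names.

```python
import re


def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Fraction of AI responses (for your target-topic prompts) that mention each brand.

    Uses case-insensitive whole-word matching, so "Acme" does not match "Acmeville".
    Assumes brand names start and end with a letter or digit (needed for \\b to work).
    """
    if not responses:
        return {brand: 0.0 for brand in brands}
    patterns = {b: re.compile(r"\b" + re.escape(b) + r"\b", re.IGNORECASE) for b in brands}
    return {
        b: sum(1 for r in responses if p.search(r)) / len(responses)
        for b, p in patterns.items()
    }
```

Passing your brand and your competitors' brands in one call gives comparable fractions for the same response set.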

Understand which content earns AI visibility.

Content metrics:

Structured data such as FAQ schema and HowTo schema may help improve AI platform citation rates when properly implemented, though results vary.

Structure your dashboard for actionable insights rather than data overload.

Executive summary view: Present high-level KPIs at the top: total citations across platforms, AI referral traffic, citation share versus competitors, and month-over-month trends. This summary lets stakeholders quickly assess overall AI search health.

Platform-specific sections: Create dedicated views for each major platform. Google AI Overviews performance differs significantly from ChatGPT visibility, which differs from Perplexity citation patterns. Platform sections enable targeted optimization decisions.

Trend analysis: AI search visibility fluctuates significantly; research shows 40–60% of the domains cited for any given query change within one month. Trend visualization helps distinguish signal from noise and identify patterns that require action. Tracking content freshness signals is critical for understanding this citation volatility.
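One way to quantify that volatility, assuming you store the cited domains per query from two monitoring snapshots, is the share of previously cited domains that dropped out. This churn definition is an assumption for illustration; the research figure may have been computed differently.

```python
from statistics import median


def domain_churn(previous: dict[str, set[str]], current: dict[str, set[str]]) -> dict[str, float]:
    """For each query, the fraction of domains cited in the previous snapshot
    that are no longer cited in the current one."""
    churn = {}
    for query, prev_domains in previous.items():
        if not prev_domains:
            continue  # nothing was cited before, so churn is undefined
        dropped = prev_domains - current.get(query, set())
        churn[query] = len(dropped) / len(prev_domains)
    return churn


def median_churn(previous, current):
    """Median churn across queries, or None if no query has prior citations."""
    values = list(domain_churn(previous, current).values())
    return median(values) if values else None
```

Plotting the median churn per month gives a baseline for "normal" volatility, so a single month's swing can be judged against it.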

Competitive comparison: Include competitor benchmarking throughout the dashboard. Your citation frequency means little without context about competitor performance on the same queries.

Multiple data sources feed a comprehensive AI search dashboard.

Native platform data:

Third-party monitoring tools: Several tools now offer AI citation monitoring. Profound (recognized as a leader in AEO analytics for 2026), Otterly.AI, and LLMrefs track citations across platforms, and BrightEdge, Ahrefs, and Semrush have added AI search features to their suites.

Custom monitoring: For comprehensive coverage, many organizations implement custom monitoring through AI platform APIs or systematic response sampling. Custom approaches capture nuances that third-party tools may miss.

Data integration: Use a data warehouse or integration platform to combine sources into a unified dashboard. Looker Studio, Tableau, or dedicated SEO reporting platforms can aggregate disparate data sources.
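In a warehouse, combining sources is a join on shared keys. The toy version below shows the idea in plain Python, assuming each source has been exported as rows keyed by a reporting period and platform; the field names are hypothetical.

```python
from collections import defaultdict

KEY_FIELDS = ("period", "platform")


def merge_sources(*sources):
    """Combine metric rows from several sources into one row per (period, platform).

    Each source is an iterable of dicts containing the key fields plus metric fields.
    If two sources report the same metric name, the later source wins.
    """
    merged = defaultdict(dict)
    for source in sources:
        for row in source:
            key = tuple(row[f] for f in KEY_FIELDS)
            merged[key].update({k: v for k, v in row.items() if k not in KEY_FIELDS})
    return [
        {**dict(zip(KEY_FIELDS, key)), **metrics}
        for key, metrics in sorted(merged.items())
    ]
```

Rows missing a metric (for example, a platform with citations but no attributed visits) simply lack that field, which keeps gaps in coverage visible instead of filling them with zeros.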

Effective visualization transforms data into decisions.

Time-series charts: Plot citation frequency and traffic over time. Use consistent date ranges across metrics for trend comparison. Include benchmark lines showing competitor performance or industry averages.

Platform comparison charts: Side-by-side platform performance visualization helps identify where optimization efforts should focus. Bar charts comparing citation frequency by platform reveal relative strengths and weaknesses.

Topic heatmaps: Visualize citation performance across topic categories using heatmaps. This reveals which content areas earn strong AI visibility and where gaps exist.
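A heatmap needs a topic × platform grid of counts. Assuming each observed citation has already been tagged with a topic (the tagging scheme is up to you), the grid can be built as below; passing the full topic list, not just the topics that were cited, keeps empty rows visible.

```python
from collections import Counter


def topic_platform_matrix(citations, topics, platforms):
    """citations: iterable of (topic, platform) pairs, one per observed citation.

    Returns a list of rows (one per topic) with a count per platform. Combinations
    with no citations appear as 0, which is what makes gaps visible.
    """
    counts = Counter(citations)
    return [[counts[(topic, platform)] for platform in platforms] for topic in topics]
```

The resulting list of rows can be handed to a plotting function such as matplotlib's `imshow`, or exported as a table for Looker Studio or Tableau.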

Alert thresholds: Set visual thresholds that highlight concerning changes. Significant citation drops or traffic declines should stand out immediately rather than hiding in dense data tables.
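Given how volatile citations are, comparing the latest period with a short trailing average is less noisy than comparing it with the single previous period. The 20% threshold and four-period window below are hypothetical defaults to tune, not recommended values.

```python
from statistics import mean


def flag_drops(series, threshold=0.2, window=4):
    """series: dict metric name -> list of period values, oldest first.

    Flags metrics whose latest value is at least `threshold` (as a fraction) below
    the mean of the previous `window` periods. Returns (name, relative_change) pairs.
    """
    alerts = []
    for name, values in series.items():
        if len(values) < window + 1:
            continue  # not enough history to form a baseline
        baseline = mean(values[-window - 1:-1])
        if baseline == 0:
            continue
        change = (values[-1] - baseline) / baseline
        if change <= -threshold:
            alerts.append((name, change))
    return alerts
```

The flagged metrics are the ones a dashboard would highlight, for example with a red marker or a conditional-formatting rule.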

Different stakeholders need different views and frequencies.

Executive reporting (monthly): High-level KPIs, strategic trends, competitive positioning. Focus on business impact rather than tactical details. Answer: "How is our AI search visibility affecting business goals?"

Marketing team reporting (weekly): Platform-specific performance, content performance, optimization opportunities. Enable tactical decisions about content updates and prioritization.

Technical team reporting (continuous): Schema validation status, crawl accessibility, structured data errors. Technical issues require immediate visibility for quick resolution.

Cross-functional alignment: AI search visibility involves content, technical, and brand teams. Dashboard design should facilitate cross-functional discussions rather than siloing data by department.

Avoid these frequent errors in AI search dashboard design.

Vanity metric focus: Citation counts without context mislead. A hundred citations in irrelevant contexts matter less than ten citations for high-intent commercial queries. Weight metrics by business impact.
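Weighting by business impact can be as simple as assigning each citation a weight based on the intent of the query it appeared for. The intent categories and weights below are hypothetical; calibrate them against your own conversion data.

```python
from math import fsum

# Hypothetical weights per query-intent category; replace with values derived
# from your own conversion or pipeline data.
INTENT_WEIGHTS = {
    "high_intent_commercial": 10.0,
    "informational": 1.0,
    "off_topic": 0.1,
}


def weighted_citation_score(citation_intents, weights=INTENT_WEIGHTS):
    """Sum of per-citation weights, given one intent label per citation.

    Unknown labels count as 0 so untagged citations don't inflate the score.
    """
    return fsum(weights.get(intent, 0.0) for intent in citation_intents)
```

With these illustrative weights, ten high-intent commercial citations score 100 while a hundred off-topic citations score 10.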

Platform aggregation: Combining all AI platforms into single metrics obscures platform-specific patterns. What works for Google AI Overviews may fail for ChatGPT. Maintain platform granularity.

Ignoring competitive context: Absolute metrics without competitive benchmarks provide false confidence. Your citations may be increasing while competitors' grow faster. Always include competitive comparison.

Static dashboards: AI search evolves rapidly. Dashboards designed for 2025's landscape may miss 2026's important metrics. Build flexible architectures that accommodate new platforms and metrics.

Insufficient historical depth: Short time windows hide meaningful trends. Maintain at least 12 months of historical data to distinguish seasonal patterns from actual performance changes.
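Twelve months of history is what makes a same-month-last-year comparison possible. This sketch contrasts month-over-month and year-over-year change for one metric; the function name is hypothetical and the series in the usage note is illustrative only.

```python
from typing import Optional


def period_changes(monthly: list[float], i: int) -> dict[str, Optional[float]]:
    """Month-over-month and year-over-year change for month index i.

    `monthly` holds one value per month, oldest first. Year-over-year needs the
    same month one year earlier, i.e. at least 12 prior months of history.
    """
    def pct(now: float, before: float) -> Optional[float]:
        return (now - before) / before if before else None

    return {
        "mom": pct(monthly[i], monthly[i - 1]) if i >= 1 else None,
        "yoy": pct(monthly[i], monthly[i - 12]) if i >= 12 else None,
    }
```

For a series that sits at 110 for most of the year and peaks at 150 last December and 160 this December, the December month-over-month jump is about 45%, while year-over-year growth is only about 7%: most of the spike is the same seasonal peak as last year.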

Build your dashboard incrementally rather than attempting comprehensive coverage immediately.

Phase 1: Foundation. Start with available data: Search Console AI Overview metrics, referral traffic segmentation, and manual citation sampling. This foundation provides immediate value while more sophisticated tracking develops.

Phase 2: Expansion. Add third-party monitoring tools for broader citation coverage. Implement competitive tracking and brand mention monitoring. Expand to additional platforms as tools mature.

Phase 3: Optimization. Build custom integrations for unique measurement needs. Develop attribution models for AI-influenced conversions. Create predictive indicators for citation likelihood.

Start with Google Search Console and Google Analytics 4 for foundational data. Add specialized tools like Profound, Otterly.AI, or platform features from Semrush and Ahrefs for citation monitoring. Use Looker Studio or similar visualization platforms to integrate sources into unified dashboards.

Weekly tactical reviews identify emerging issues and optimization opportunities. Monthly strategic reviews assess trends and competitive position. Daily monitoring for high-priority alerts catches critical issues quickly. Balance review frequency against resource availability and business impact.

No single metric captures AI search performance comprehensively. Citation frequency indicates visibility, but referral traffic measures actual impact. Share of voice shows competitive position. The most important metric depends on business goals: brand awareness programs prioritize mentions, while lead generation focuses on referral conversions.
