AI search has created a parallel visibility surface where different rules determine winners and losers. Organizations excelling in traditional SEO may find themselves invisible in AI responses while smaller competitors earn prominent citations. Competitive benchmarking for AI search requires new methodologies that measure citation share, track visibility gaps, and identify optimization opportunities across platforms where competitors may already hold advantages.

AI search changes competitive dynamics in ways that demand systematic monitoring.

The new competitive reality: Traditional search rankings provided clear competitive visibility—you could see exactly who ranked above and below you. AI search obscures this visibility. Your competitors may appear in AI responses for queries where you're completely absent, and you won't know without active monitoring.

The stakes of invisibility: Research from the 2026 AEO/GEO Benchmarks Report reveals that AI-powered discovery now represents a parallel surface of visibility where AI systems decide which brands appear—and which are left out of the conversation entirely. Organizations without competitive benchmarking operate blind to threats until revenue impact becomes undeniable.

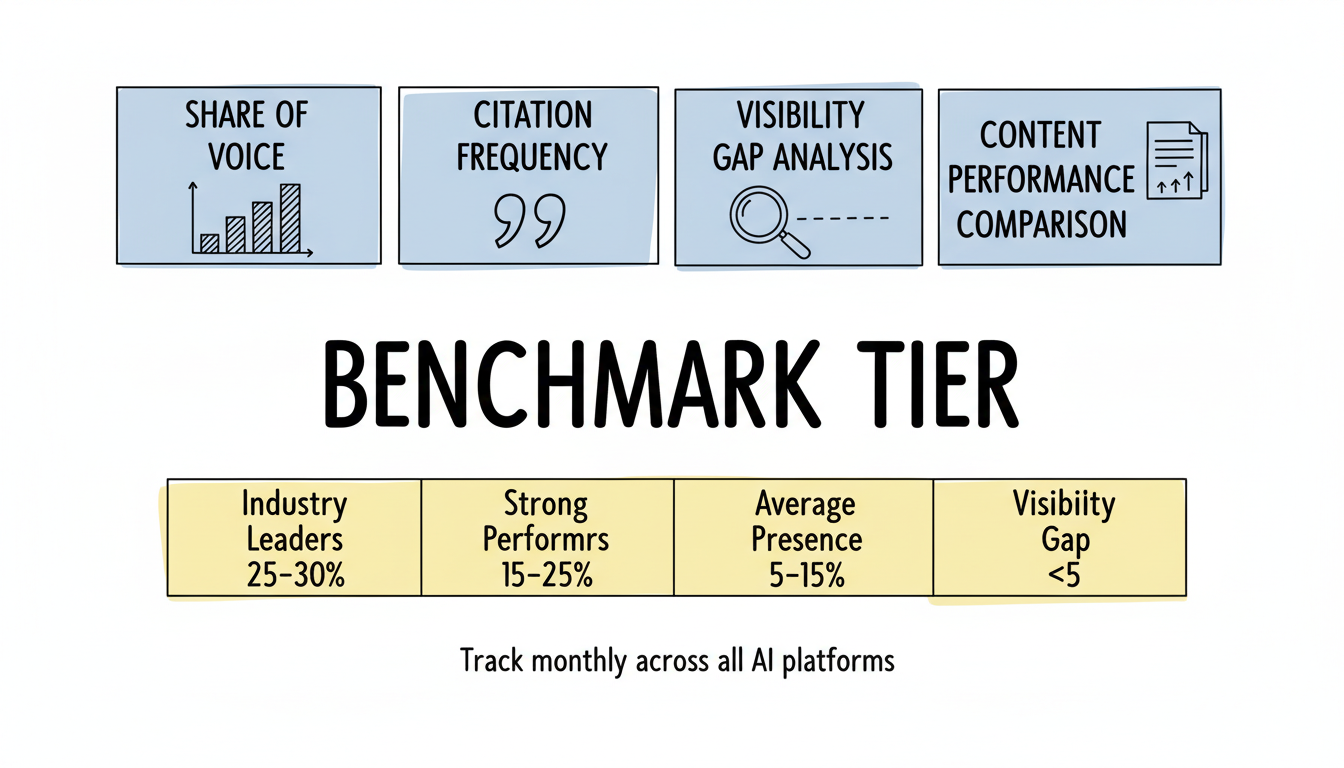

Early-mover advantage: Top-performing brands capture 15% or greater share of voice across core query sets, with enterprise leaders reaching 25-30% in specialized verticals. These positions become increasingly difficult to displace as AI systems learn to trust established citation sources.

Track these metrics to understand competitive positioning across AI search.

Share of voice measures how often AI responses to your target queries mention your brand, relative to how often they mention competitors.

How to calculate: Sample a consistent set of queries across platforms monthly. Record how many responses cite or mention your brand versus competitor brands. Calculate your percentage of total mentions for each query set.
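Share of voice can be computed two ways: as a brand's percentage of all tracked-brand mentions in a query set, or as the percentage of responses that mention the brand at all. The sketch below computes both. It assumes each AI response has already been reduced to the set of brand names it mentions (by a tool or by manual tagging); the brand names are hypothetical.

```python
from collections import Counter


def share_of_voice(responses, brands):
    """Each brand's percentage of all tracked-brand mentions in a query set.

    responses: one set per AI response, holding the brand names it mentions.
    brands: the tracked brands (yours plus competitors).
    A brand counts at most once per response.
    """
    tracked = set(brands)
    counts = Counter()
    for mentioned in responses:
        counts.update(mentioned & tracked)
    total = sum(counts.values())
    if total == 0:
        return {brand: 0.0 for brand in brands}
    return {brand: 100.0 * counts[brand] / total for brand in brands}


def mention_rate(responses, brand):
    """Percentage of responses that mention the brand at all."""
    if not responses:
        return 0.0
    return 100.0 * sum(brand in mentioned for mentioned in responses) / len(responses)


# Hypothetical brands and responses for one query set on one platform.
responses = [{"Acme", "Globex"}, {"Globex"}, {"Acme", "Initech"}, set()]
print(share_of_voice(responses, ["Acme", "Globex", "Initech"]))
# {'Acme': 40.0, 'Globex': 40.0, 'Initech': 20.0}
print(mention_rate(responses, "Acme"))  # 50.0
```

Whichever definition you pick, apply it consistently month to month so trends stay comparable.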

Benchmarks by position:

Platform variation: Share of voice varies significantly by platform. A brand dominating Google AI Overviews may have minimal ChatGPT presence. Track share separately for each major platform to identify platform-specific competitive gaps.
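To keep platform granularity, the same mention counts can be grouped by platform and compared against each platform's leading brand. This is a minimal sketch; the platform labels (`ai_overviews`, `chatgpt`) and brands are hypothetical, and brand extraction is assumed to happen upstream.

```python
from collections import Counter, defaultdict


def platform_gaps(observations, brands, you):
    """Compare your share of mentions with the leading brand on each platform.

    observations: (platform, set_of_brands_mentioned) pairs, one per AI response.
    Returns {platform: (your_share, leader, leader_share)}, shares in percent.
    """
    tracked = set(brands)
    counts = defaultdict(Counter)
    for platform, mentioned in observations:
        counts[platform].update(mentioned & tracked)
    gaps = {}
    for platform, c in counts.items():
        total = sum(c.values())
        if total == 0:
            continue  # no tracked brand was mentioned on this platform
        leader, leader_count = c.most_common(1)[0]
        gaps[platform] = (100.0 * c[you] / total, leader, 100.0 * leader_count / total)
    return gaps


observations = [
    ("ai_overviews", {"Acme"}),
    ("ai_overviews", {"Acme", "Globex"}),
    ("chatgpt", {"Globex"}),
    ("chatgpt", {"Globex", "Initech"}),
]
for platform, (yours, leader, lead) in platform_gaps(
    observations, ["Acme", "Globex", "Initech"], "Acme"
).items():
    print(f"{platform}: you {yours:.0f}%, leader {leader} {lead:.0f}%")
```

In this toy data, Acme leads on one platform and is absent on the other, which is exactly the pattern a single blended metric would hide.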

Monitor how often each competitor's content earns citations in AI responses.

Citation tracking elements:

Competitive patterns: Identify which competitors consistently earn citations you don't. Analyze their cited content characteristics—length, structure, authority signals—to understand what differentiates their approach. Understanding schema validation and testing for AI search can help identify technical advantages competitors may hold.
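Finding the competitors that "consistently earn citations you don't" comes down to counting, per competitor, the queries where it is cited and you are not. A minimal sketch, assuming you already have the set of cited brands for each sampled query (queries and brands here are hypothetical):

```python
from collections import Counter


def exclusive_citations(results, you):
    """Rank competitors by the number of queries where they were cited and you were not.

    results: {query: set of brands cited in the AI response for that query}.
    """
    counts = Counter()
    for cited in results.values():
        if you not in cited:
            counts.update(cited)
    return counts.most_common()


results = {
    "best crm for startups": {"Globex"},
    "crm pricing comparison": {"Acme", "Globex"},
    "crm with email automation": {"Globex", "Initech"},
    "open source crm": {"Acme", "Initech"},
}
print(exclusive_citations(results, "Acme"))  # [('Globex', 2), ('Initech', 1)]
```

The competitors at the top of this list are the ones whose cited content is most worth analyzing.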

Map queries where competitors appear and you don't.

Gap identification process:

Gap prioritization: Not all gaps matter equally. Prioritize closing gaps on high-value commercial queries over informational queries with limited conversion potential.
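Gap mapping and prioritization can be combined in one pass: keep only queries where a tracked competitor is cited and you are not, then weight them by commercial intent. The sketch below assumes each query has been hand-labeled with an intent; the labels, the 3:1 weighting, and the score formula are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass, field

# Hypothetical weights: commercial queries count three times as much as informational ones.
INTENT_WEIGHT = {"commercial": 3, "informational": 1}


@dataclass
class QueryResult:
    query: str
    intent: str  # "commercial" or "informational" (hypothetical labels)
    cited_brands: set = field(default_factory=set)


def prioritized_gaps(results, you, competitors):
    """Queries where a tracked competitor is cited and you are not, highest priority first.

    Priority = intent weight times the number of tracked competitors cited on that query.
    """
    rivals = set(competitors)
    gaps = []
    for r in results:
        present = r.cited_brands & rivals
        if present and you not in r.cited_brands:
            score = INTENT_WEIGHT.get(r.intent, 1) * len(present)
            gaps.append((score, r.query, sorted(present)))
    gaps.sort(key=lambda g: (-g[0], g[1]))  # highest score first, then alphabetical
    return gaps


results = [
    QueryResult("best crm for startups", "commercial", {"Globex"}),
    QueryResult("what is a crm", "informational", {"Globex", "Initech"}),
    QueryResult("crm pricing", "commercial", {"Acme", "Globex"}),
]
for score, query, cited_rivals in prioritized_gaps(results, "Acme", ["Globex", "Initech"]):
    print(score, query, cited_rivals)
```

Here the commercial gap outranks the informational one even though fewer competitors appear on it, which reflects the prioritization described above.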

Understand why competitor content earns citations yours doesn't.

Analysis dimensions:

Actionable insights: Don't just identify differences—translate observations into optimization priorities. If competitors earning citations use more structured formatting, implement similar structures. If their content runs 50% longer, evaluate whether depth expansion makes sense for your topics. Implementing AI SEO best practices for 2026 can help close performance gaps systematically.
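A rough way to turn "their content runs 50% longer" or "they use more structure" into numbers is to profile both pages and flag dimensions where the cited page exceeds yours by a chosen ratio. This sketch assumes page text is available as Markdown and uses crude regex counts for words, headings, and list items; real pages would need proper HTML parsing.

```python
import re


def content_profile(markdown_text):
    """Rough structural profile of a page, assuming its text is available as Markdown."""
    lines = markdown_text.splitlines()
    return {
        "words": len(re.findall(r"\b\w+\b", markdown_text)),
        "headings": sum(1 for ln in lines if re.match(r"#{1,6}\s", ln)),
        "list_items": sum(1 for ln in lines if re.match(r"\s*(?:[-*+]|\d+\.)\s", ln)),
    }


def compare_profiles(yours, theirs, ratio=1.5):
    """Dimensions where the cited competitor page is at least `ratio` times yours.

    ratio=1.5 corresponds to "50% longer"; a zero on your side is treated as 1.
    """
    return {
        key: (yours.get(key, 0), value)
        for key, value in theirs.items()
        if value >= ratio * max(yours.get(key, 0), 1)
    }


ours = content_profile("# Guide\nShort intro text here.")
cited = content_profile(
    "# Guide\n## Steps\n- one\n- two\n- three\nLonger intro text goes here with detail."
)
print(compare_profiles(ours, cited))
# {'words': (5, 12), 'headings': (1, 2), 'list_items': (0, 3)}
```

The flags are prompts for editorial review, not targets: a longer competitor page is only worth matching if the extra depth serves the query.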

Implement systematic processes for ongoing competitive intelligence.

Build representative query sets for consistent benchmarking.

Query selection criteria:

Query set size: Start with 50-100 queries for manageable initial benchmarking. Expand to 200-500 queries as processes mature. For scale, the 2026 AEO/GEO Benchmarks Report analyzed 3.5 million unique prompts; enterprise-scale benchmarking requires substantial query coverage.

AI responses vary from one run to the next, so benchmarking requires systematic sampling.

Sampling considerations:

Frequency cadence: Monthly benchmarking captures meaningful trends without excessive resource consumption. Increase frequency during active optimization campaigns or competitive threat situations.
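Because the same query can return different answers, a single run per query is a noisy measurement. One way to handle this is to ask each query several times and record both the mention rate and a rough error bar. In this sketch, `ask` is a hypothetical callable standing in for whatever client or tool you actually use, and brand detection is a naive case-insensitive substring match (it would also match "Acme" inside "Acmeville").

```python
import math


def sampled_mention_rate(ask, query, brand, runs=5):
    """Estimate how often one platform mentions a brand for a query, across repeated runs.

    ask: hypothetical callable that sends `query` to an AI platform and returns the
    response text; wire it to whatever client or tool you actually use.
    Returns (rate, standard_error); the error uses the normal approximation
    sqrt(p * (1 - p) / n) for a proportion.
    """
    hits = sum(brand.lower() in ask(query).lower() for _ in range(runs))
    rate = hits / runs
    return rate, math.sqrt(rate * (1 - rate) / runs)


# Stand-in for a real platform: returns canned responses in order.
canned = iter(["Acme and Globex are popular.", "Globex leads the market.", "Try Acme."])
rate, se = sampled_mention_rate(lambda q: next(canned), "best crm", "Acme", runs=3)
print(f"{rate:.2f} +/- {se:.2f}")
```

With only a handful of runs the error bar is wide, which is a reason to compare trends across a whole query set rather than react to single-query swings.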

Choose competitors strategically for meaningful benchmarking.

Competitor categories:

Competitor count: Track 5-10 competitors for practical benchmarking. More competitors provide broader perspective but increase monitoring complexity.
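One way to choose which 5-10 competitors to track is to rank brands by how often they already appear in sampled AI responses for your query set, rather than by traditional search rankings. A minimal sketch, assuming brand names have been extracted from each response (the brands are hypothetical):

```python
from collections import Counter


def rank_competitors(responses, you, top_n=10):
    """Brands mentioned most often in sampled AI responses, excluding your own.

    responses: one set of mentioned brand names per AI response.
    """
    counts = Counter()
    for mentioned in responses:
        counts.update(b for b in mentioned if b != you)
    return [brand for brand, _ in counts.most_common(top_n)]


responses = [{"Acme", "Globex"}, {"Globex", "Initech"}, {"Globex", "Umbrella"}, {"Initech"}]
print(rank_competitors(responses, "Acme", top_n=2))  # ['Globex', 'Initech']
```

Rerunning this on fresh samples is also a cheap way to spot brands that are gaining AI visibility but are not yet in your tracked set.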

Multiple tools support competitive AI benchmarking.

Dedicated AI visibility tools:

Tool selection criteria:

Manual verification: Even with tools, validate findings through manual sampling. Tools may miss nuances or misclassify mentions. Periodic manual checks ensure tool accuracy. Understanding different AI-powered search engines helps inform which platforms require the most rigorous competitive monitoring.
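Manual verification is easier to sustain when it is a small, reproducible sample with a single agreement number to watch. This sketch assumes the tool's output can be exported as a mapping from response ID to "brand mentioned: True/False"; the IDs and labels are hypothetical.

```python
import random


def verification_sample(tool_labels, k, seed=0):
    """Reproducible random sample of response IDs for manual review."""
    ids = sorted(tool_labels)
    return random.Random(seed).sample(ids, min(k, len(ids)))


def agreement_rate(tool_labels, manual_labels):
    """Fraction of manually reviewed responses where the tool's label matches the reviewer's.

    Both arguments map a response ID to True/False ("brand mentioned").
    Returns None if no reviewed ID is also in the tool's labels.
    """
    reviewed = [rid for rid in manual_labels if rid in tool_labels]
    if not reviewed:
        return None
    return sum(tool_labels[rid] == manual_labels[rid] for rid in reviewed) / len(reviewed)


tool = {"r1": True, "r2": False, "r3": True, "r4": True}
to_check = verification_sample(tool, k=2)  # IDs to hand to a reviewer
manual = {"r1": True, "r2": True, "r3": True, "r4": False}  # hypothetical reviewer labels
print(agreement_rate(tool, manual))  # 0.5
```

A falling agreement rate is a signal to recheck the tool's brand matching before trusting its share-of-voice figures.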

Benchmarking data only matters when it drives optimization decisions.

Convert competitive insights into prioritized action.

High priority actions:

Medium priority actions:

Lower priority actions:

Develop content specifically to address competitive gaps.

Gap-closing content: When competitors earn citations you don't, analyze why. Create or upgrade content addressing the specific characteristics that differentiate their cited content—whether depth, structure, authority, or freshness.

Defensive content: For queries where you hold strong positions, maintain advantage through regular updates, authority reinforcement, and structural optimization. Competitors will target your successful queries. Tracking AEO metrics and KPIs helps identify when defensive action is needed.

Offensive content: Identify queries where no competitor dominates. First-mover advantage in AI search can establish citation patterns that persist as AI systems learn which sources to trust.
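The three content stances can be assigned per query from share-of-voice data. The rule below is one possible mapping, not an established method: the 25% thresholds for "strong position" and "dominant competitor" are hypothetical defaults loosely based on the leader range cited earlier, and should be calibrated to your own benchmarks.

```python
def classify_query(your_share, competitor_shares, strong=25.0, dominant=25.0):
    """Suggest a content stance for one query from share-of-voice percentages.

    strong / dominant: hypothetical thresholds (percent) for "you hold a strong
    position" and "a competitor dominates"; calibrate them to your own benchmarks.
    """
    top = max(competitor_shares, default=0.0)
    if your_share >= strong and your_share >= top:
        return "defensive"    # you lead: protect the position
    if top < dominant:
        return "offensive"    # nobody dominates: room to establish yourself
    if your_share < top:
        return "gap-closing"  # a dominant competitor outranks you
    return "monitor"          # only reachable if strong > dominant


for shares in [(40.0, [20.0, 10.0]), (0.0, [50.0, 10.0]), (5.0, [15.0, 10.0])]:
    print(shares, classify_query(*shares))
```

In this example the three queries come out as defensive, gap-closing, and offensive, in that order.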

Share competitive intelligence across stakeholders.

Executive reporting: Summarize share of voice trends, major gap movements, and competitive threats. Connect benchmarking data to business outcomes—lost visibility correlates with revenue vulnerability.

Team reporting: Provide detailed gap analysis and content recommendations. Enable content and technical teams to act on competitive insights with specific guidance.

Continuous monitoring: AI search visibility fluctuates significantly; research indicates that 40-60% of the domains cited for a given query change within one month. Establish alert thresholds that flag competitive movements requiring immediate attention.
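Two simple signals can back such alerts: citation churn (the fraction of last period's cited domains that are no longer cited) and month-over-month share-of-voice changes per brand. This is a minimal sketch; the churn definition is one reasonable choice among several, and the 5-percentage-point threshold is an assumption, not a recommended value.

```python
def citation_churn(previous, current):
    """Fraction of last period's cited domains that are no longer cited (0.0 to 1.0)."""
    if not previous:
        return 0.0
    return len(previous - current) / len(previous)


def share_alerts(prev_share, curr_share, threshold_points=5.0):
    """Brands whose share of voice moved by at least `threshold_points` percentage points."""
    alerts = {}
    for brand in sorted(set(prev_share) | set(curr_share)):
        delta = curr_share.get(brand, 0.0) - prev_share.get(brand, 0.0)
        if abs(delta) >= threshold_points:
            alerts[brand] = delta
    return alerts


print(citation_churn({"a.example", "b.example", "c.example", "d.example"},
                     {"a.example", "b.example", "e.example"}))  # 0.5
print(share_alerts({"Acme": 20.0, "Globex": 30.0},
                   {"Acme": 12.0, "Globex": 31.0, "Initech": 6.0}))
# {'Acme': -8.0, 'Initech': 6.0}
```

Treating brands missing from one period as 0% means a newly visible competitor (Initech here) triggers an alert as soon as it crosses the threshold.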

Avoid these errors that undermine competitive intelligence value.

Insufficient query coverage: Small query sets miss competitive movements on important queries. Build comprehensive sets covering your full topic landscape.

Infrequent sampling: Quarterly benchmarking misses rapid competitive changes. A monthly minimum ensures timely competitive awareness.

Platform aggregation: Combining all platforms into single metrics obscures platform-specific competitive dynamics. Maintain platform granularity in competitive tracking.

Static competitor sets: Competitive landscapes evolve. Review competitor selections quarterly and add emerging competitors gaining AI visibility.

Analysis without action: Benchmarking reports that don't drive optimization waste resources. Every benchmarking cycle should produce prioritized action items.

Start with 5-10 competitors covering direct, indirect, and content competitors. More competitors provide broader perspective but increase monitoring complexity. Prioritize competitors most active in AI responses for your target queries over those dominant only in traditional search.

Monthly benchmarking captures meaningful trends while remaining resource-practical. Increase frequency to weekly during active campaigns or when responding to competitive threats. Quarterly benchmarking misses too much competitive movement in the rapidly evolving AI search landscape.

Industry leaders achieve 25-30% share on core queries, with strong performers at 15-25%. Realistic targets depend on competitive intensity in your space and current positioning. Start by establishing baseline measurements, then set improvement targets based on gap analysis and resource availability.
