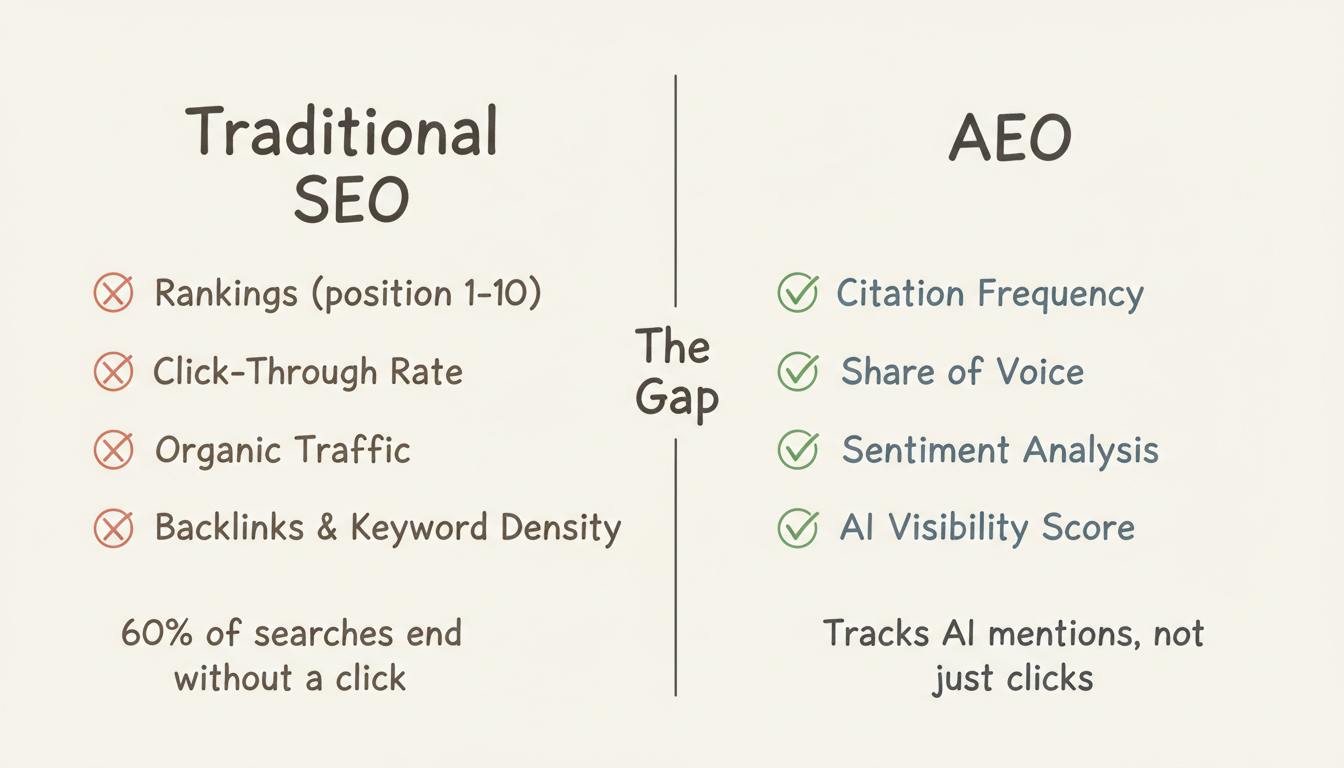

AEO metrics and KPIs require fundamentally different approaches than traditional SEO measurement. While SEO tracks rankings, click-through rates, and organic traffic, Answer Engine Optimization measures how often AI platforms cite your content, how they describe your brand, and whether those mentions influence business outcomes.

This guide provides a comprehensive framework for measuring AEO success, covering the essential metrics, tracking methodologies, and tools needed to quantify your visibility across ChatGPT, Perplexity, Google AI Overviews, and other AI platforms.

Traditional SEO metrics don't capture AI visibility effectiveness. Understanding this gap clarifies what AEO measurement requires, particularly as AI SEO best practices in 2026 continue to evolve beyond conventional ranking signals.

AI platforms often provide complete answers without sending users to websites. When ChatGPT answers a question by citing your brand, or Perplexity references your content in a synthesized response, traditional click-based metrics miss this entirely. Research suggests that around 60% of searches now end without a click, a dramatic increase from 26% just two years ago.

What traditional metrics miss:

Research analyzing citation patterns across AI platforms reveals that classic SEO metrics correlate only weakly with AI citations. Domain Rating shows the highest correlation with ChatGPT citations, yet that correlation is only 0.161, while factors like backlinks and keyword density show negative correlations. Understanding these nuances is critical when implementing generative engine optimization strategies that differ from traditional approaches.

Citation correlation insights by platform:

This data suggests that AEO requires distinct measurement approaches, which is why many organizations turn to AEO implementation services to properly establish tracking frameworks.

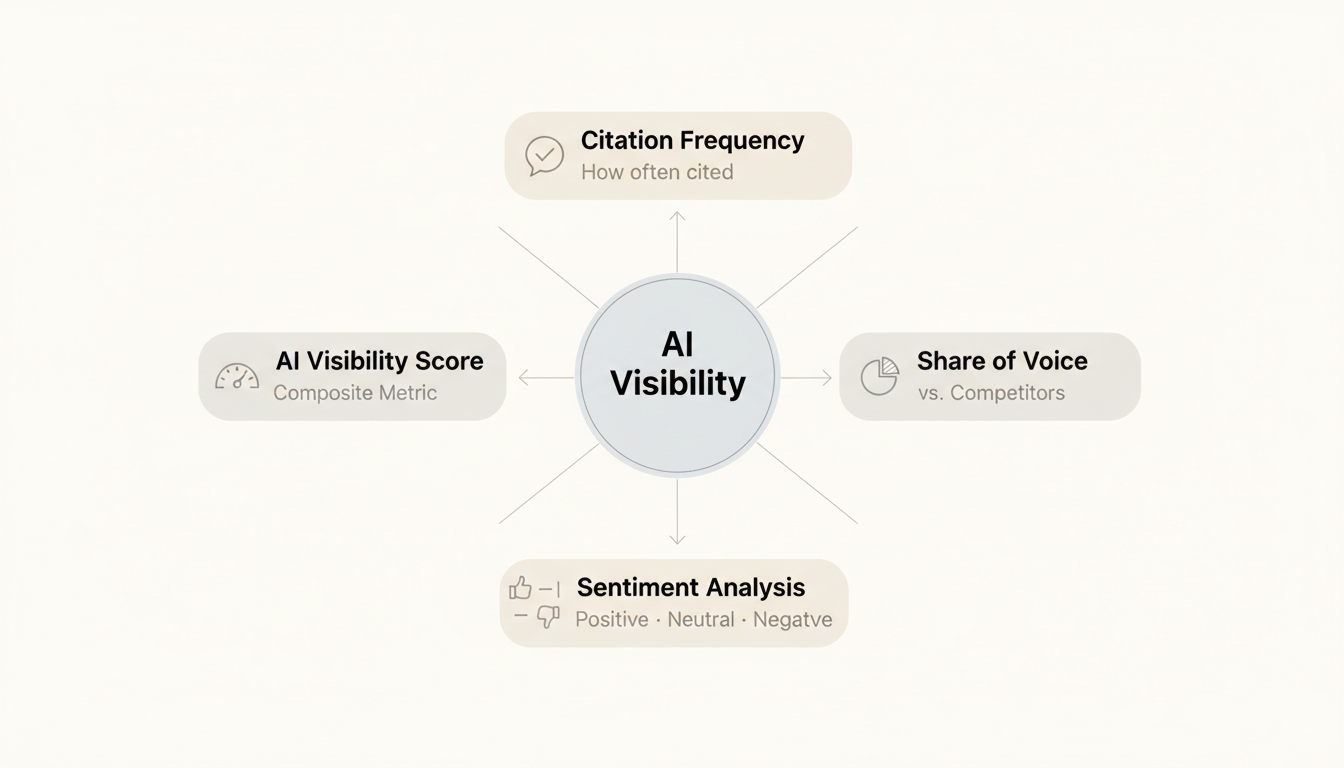

Four primary metrics form the foundation of AEO measurement.

Citation frequency measures how often AI platforms reference your content or brand when answering relevant queries. This is the closest equivalent to traditional rankings in the AI search context.

What to measure:

Tracking approach: Develop a priority query set covering your core topics—typically 50-100 queries per product line or topic area. Query AI platforms systematically and document when your brand or content appears in responses. Understanding how to check if your site appears in Google AI Overviews provides a starting point for this monitoring.

Benchmarking: Compare citation frequency against previous periods to establish trends. Also benchmark against competitors to understand relative visibility.
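The tracking and benchmarking steps above reduce to simple arithmetic once each check is logged. Below is a minimal sketch, assuming every check of a tracked query on a platform is recorded with a yes/no "cited" flag. The `QueryCheck` record and both function names are hypothetical, not part of any tool's API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class QueryCheck:
    """One check of a tracked query on one AI platform (hypothetical record)."""
    query: str
    platform: str
    cited: bool  # True if the brand or its content appeared in the response


def citation_frequency(checks):
    """Return (overall citation rate, {platform: citation rate})."""
    if not checks:
        return 0.0, {}
    tallies = defaultdict(lambda: [0, 0])  # platform -> [cited, total]
    for check in checks:
        tallies[check.platform][1] += 1
        if check.cited:
            tallies[check.platform][0] += 1
    overall = sum(check.cited for check in checks) / len(checks)
    per_platform = {p: cited / total for p, (cited, total) in tallies.items()}
    return overall, per_platform


def period_change(previous_rate, current_rate):
    """Relative change between two periods, e.g. 0.5 means +50%."""
    if previous_rate == 0:
        return float("inf") if current_rate > 0 else 0.0
    return (current_rate - previous_rate) / previous_rate
```

Running the same function over a competitor's checks gives the competitive benchmark. Period-over-period comparison is only meaningful when both periods use the same query set.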

Share of voice measures your citation presence relative to competitors for the same query sets. When users ask AI about your category, how often does your brand appear compared to alternatives?

What to measure:

Why it matters: A brand earning citations in 40% of category queries holds a significant visibility advantage over competitors appearing in 15%. Share of voice reveals competitive dynamics that traditional SEO metrics don't capture, highlighting one of the key differences between SEO and AEO that measurement must account for.
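Share of voice can be computed in two common ways. The first is presence rate, the share of queries in which each brand appears, which matches the 40% vs 15% example. The second is the share of all tracked brand mentions. The sketch below computes both. It assumes each AI response has already been reduced to the set of brand names it mentions. The function name and input shape are illustrative assumptions.

```python
def share_of_voice(responses, brands):
    """responses: list of sets of brand names mentioned in each AI response.

    Returns (presence, share):
      presence[b] = fraction of responses that mention brand b
      share[b]    = brand b's fraction of all mentions of the tracked brands
    """
    n = len(responses)
    mentions = {b: sum(1 for r in responses if b in r) for b in brands}
    total = sum(mentions.values())
    presence = {b: (mentions[b] / n if n else 0.0) for b in brands}
    share = {b: (mentions[b] / total if total else 0.0) for b in brands}
    return presence, share


responses = [{"Acme", "Rival"}, {"Acme", "Other"}, {"Rival"}, set()]
presence, share = share_of_voice(responses, ["Acme", "Rival", "Other"])
```

Presence rate answers "how often do we show up?", while mention share answers "how much of the conversation do we own?". Tracking both keeps one number from hiding the other.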

AI platforms don't just mention brands—they describe them. Sentiment analysis measures whether those descriptions position you favorably, neutrally, or negatively.

What to measure:

Tracking approach: Review the actual text of AI responses mentioning your brand. Document the context: Are you recommended? Mentioned with caveats? Described accurately? Sentiment analysis reveals perception patterns beyond simple presence, which ties directly to AI overview authority signals that influence how platforms present your brand.
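Once reviewers have labeled each mention, the labels can be rolled up into a distribution and a single net score. This sketch assumes a three-point scale (`positive`, `neutral`, `negative`) applied by a human reviewer. The scale and the "net sentiment" definition (positive share minus negative share) are illustrative choices, not a standard.

```python
from collections import Counter

LABELS = ("positive", "neutral", "negative")  # assumed three-point review scale


def sentiment_summary(labels):
    """Return ({label: share}, net sentiment in [-1, 1]) for reviewed mentions."""
    counts = Counter(labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return {label: 0.0 for label in LABELS}, 0.0
    distribution = {label: counts[label] / total for label in LABELS}
    net = (counts["positive"] - counts["negative"]) / total
    return distribution, net
```

Rejecting unknown labels keeps inconsistent reviewer notes (for example "great" or "mixed") from silently skewing the totals.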

AI visibility score provides a composite metric combining citation frequency, sentiment, and positioning into a single benchmark. Think of it as your overall "AI market share."

Components typically included:

Using visibility scores: Track visibility scores monthly to identify trends. Compare against competitors to understand relative positioning. Use score changes to evaluate optimization effectiveness, which becomes particularly important when choosing between AEO optimization software solutions.
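A composite score is just a weighted average of normalized components. The sketch below combines citation rate, net sentiment, and average position into a 0-100 score. The weights (50/30/20), the use of `1 / avg_position` for positioning, and the function name are all assumptions for illustration. Commercial tools use their own undisclosed formulas, so scores from different tools are not directly comparable.

```python
def visibility_score(citation_rate, net_sentiment, avg_position,
                     weights=(0.5, 0.3, 0.2)):
    """Hypothetical 0-100 composite AI visibility score.

    citation_rate: fraction of tracked queries citing the brand, in [0, 1]
    net_sentiment: positive share minus negative share, in [-1, 1]
    avg_position:  average order of the brand's mention in responses (1 = first)
    """
    if not 0 <= citation_rate <= 1:
        raise ValueError("citation_rate must be in [0, 1]")
    if not -1 <= net_sentiment <= 1:
        raise ValueError("net_sentiment must be in [-1, 1]")
    if avg_position < 1:
        raise ValueError("avg_position must be >= 1")
    w_cite, w_sent, w_pos = weights
    sentiment_component = (net_sentiment + 1) / 2  # rescale [-1, 1] to [0, 1]
    position_component = 1 / avg_position         # first mention scores 1.0
    weighted = (w_cite * citation_rate
                + w_sent * sentiment_component
                + w_pos * position_component)
    return round(100 * weighted / (w_cite + w_sent + w_pos), 1)
```

Whatever weights you choose, keep them fixed across months and competitors. Otherwise score changes reflect formula changes rather than visibility changes.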

Beyond core metrics, secondary measures provide deeper insight into AEO performance.

Not all citations are equal. Citation quality measures the depth and impact of your AI visibility, which matters most when you are applying AEO optimization techniques for conversion.

Quality indicators:

High-quality citations drive greater influence than passing mentions.

Platform coverage tracks which AI platforms cite your content and identifies gaps in visibility. This becomes especially important as AI-powered search engines like Perplexity are used alongside traditional search.

Key platforms to monitor:

Inconsistent coverage across platforms signals optimization opportunities.
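One way to turn inconsistent coverage into a concrete to-do list is to find queries where you are cited on at least one platform but not on the others. This sketch assumes you already have, per platform, the set of tracked queries that cited you. The function name and data shape are hypothetical.

```python
def coverage_gaps(cited_by_platform, tracked_queries):
    """Per platform, list tracked queries cited elsewhere but not on that platform.

    cited_by_platform: {platform name: set of tracked queries that cited the brand}
    """
    tracked = set(tracked_queries)
    cited_anywhere = set().union(*cited_by_platform.values()) & tracked
    return {
        platform: sorted(cited_anywhere - cited)
        for platform, cited in cited_by_platform.items()
    }


gaps = coverage_gaps(
    {"ChatGPT": {"a", "b"}, "Perplexity": {"a"}, "AI Overviews": set()},
    tracked_queries=["a", "b", "c"],
)
```

Queries cited nowhere (like `"c"` above) are a different problem, since no platform recognizes you for them yet. That is why they are left out of the gap lists.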

Query expansion measures whether your citation presence is growing across new query types beyond your initial target set.

What to track:

Growing query coverage indicates increasing AI recognition of your authority. Extending that coverage often calls for strategic use of generative AI for structured data to maximize discoverability.
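Query expansion can be tracked by comparing each period's cited queries against the initial target set. The sketch assumes you log, per month, every query (tracked or discovered) that cited your brand. It reports how many cited queries fall outside the original set and which ones appear for the first time. Names and input shape are illustrative.

```python
def query_expansion(initial_queries, cited_queries_by_month):
    """cited_queries_by_month: list of (month, set of cited queries), oldest first.

    Returns a list of (month, count of cited queries outside the initial set,
    sorted list of those queries appearing for the first time).
    """
    initial = set(initial_queries)
    seen_new = set()
    report = []
    for month, cited in cited_queries_by_month:
        new = set(cited) - initial      # cited queries beyond the target set
        first_time = new - seen_new     # not cited in any earlier month
        seen_new |= new
        report.append((month, len(new), sorted(first_time)))
    return report


report = query_expansion(
    ["a", "b"],
    [("2025-01", {"a", "x"}), ("2025-02", {"a", "x", "y"})],
)
```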

Source attribution identifies which specific content earns citations and which gets overlooked.

What to analyze:

Understanding which content performs guides optimization priorities and informs decisions around off-page AEO optimization investments.
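Source attribution comes down to matching the URLs AI platforms cite against your own page inventory. This sketch normalizes URLs by lowercasing the host, dropping query strings and fragments, and trimming trailing slashes, so tracking parameters don't split one page into several. It then counts citations per page and lists pages that were never cited. The normalization rules are an assumption; adjust them to how your site structures URLs.

```python
from collections import Counter
from urllib.parse import urlsplit


def normalize(url):
    """Reduce a URL to host + path (query string and fragment dropped)."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return f"{parts.netloc.lower()}{path}"


def source_attribution(cited_urls, site_pages):
    """Return ({page: citation count} for cited pages, sorted uncited pages)."""
    counts = Counter(normalize(u) for u in cited_urls)
    pages = {normalize(p) for p in site_pages}
    earning = {p: counts[p] for p in pages if counts[p] > 0}
    overlooked = sorted(p for p in pages if counts[p] == 0)
    return earning, overlooked


earning, overlooked = source_attribution(
    ["https://example.com/guide/?utm_source=chat", "https://Example.com/guide",
     "https://example.com/pricing"],
    ["https://example.com/guide", "https://example.com/pricing/",
     "https://example.com/blog"],
)
```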

Measurement without business connection produces data without value. Attribution links AEO metrics to outcomes that matter, demonstrating AEO's practical impact relative to other digital marketing channels.

AI platforms send some traffic to your site, and where a referrer is passed, that traffic is trackable. Google Analytics 4 can segment traffic from AI sources.

Traffic sources to segment:

What to measure:

Research indicates AI referral traffic often shows higher intent and engagement than traditional search traffic, which factors into AI search conversion funnel optimization strategies.
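Segmenting AI referrals usually means matching the session source against a list of AI assistant domains. The sketch below applies that idea to exported session-source data. The domain list is an assumption based on commonly seen AI assistant hostnames and is not exhaustive, so check it against your own GA4 source report. The same regex could likely be adapted for a regex condition in a GA4 custom channel group. Clicks from Google AI Overviews typically arrive as ordinary Google traffic and will not be isolated this way.

```python
import re

# Assumed, non-exhaustive list of AI assistant referrer domains.
AI_SOURCE_PATTERN = re.compile(
    r"(^|\.)(chatgpt\.com|chat\.openai\.com|perplexity\.ai|"
    r"gemini\.google\.com|copilot\.microsoft\.com|claude\.ai)$",
    re.IGNORECASE,
)


def classify_source(session_source):
    """Label a session source (e.g. 'chatgpt.com') as 'AI referral' or 'Other'."""
    if AI_SOURCE_PATTERN.search(session_source.strip()):
        return "AI referral"
    return "Other"


def ai_share(sessions):
    """sessions: iterable of (session_source, session_count). Returns AI share."""
    total = ai = 0
    for source, count in sessions:
        total += count
        if classify_source(source) == "AI referral":
            ai += count
    return ai / total if total else 0.0
```

Anchoring the pattern on `(^|\.)` and `$` avoids false matches on look-alike domains such as `notchatgpt.com`.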

AI visibility influences brand search behavior. Users discovering your brand through AI mentions often search directly afterward.

Correlation tracking:

Direct surveys provide attribution data analytics can't capture, offering insight that complements AI SEO software ROI testing methodologies.

Survey approaches:

Survey data reveals AI's role in customer journeys that don't generate trackable clicks.
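Free-text answers to a "How did you hear about us?" question can be tallied with simple keyword matching. The keyword list below is a hypothetical starting point and deliberately avoids a bare "ai", which would match words like "email". Review unmatched answers periodically to extend it.

```python
# Hypothetical keyword list; extend it after reviewing real survey answers.
AI_KEYWORDS = ("chatgpt", "perplexity", "gemini", "copilot", "claude",
               "ai overview", "ai search")


def ai_discovery_rate(responses):
    """Fraction of free-text survey responses that mention an AI tool."""
    if not responses:
        return 0.0
    hits = sum(
        1 for answer in responses
        if any(keyword in answer.lower() for keyword in AI_KEYWORDS)
    )
    return hits / len(responses)
```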

AI-discovered leads may exhibit different characteristics than other sources.

Quality metrics to compare:

Understanding quality differences justifies AEO investment, particularly when evaluating enterprise vs SMB AEO strategy approaches.
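A basic lead-quality comparison groups leads by attributed source and compares conversion rate and average deal value. The `Lead` record and its fields are hypothetical; substitute whatever your CRM export provides.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Lead:
    source: str          # attributed source, e.g. "AI referral" or "Organic search"
    converted: bool
    deal_value: float = 0.0


def lead_quality_by_source(leads):
    """Return {source: {"leads", "conversion_rate", "avg_deal_value"}}."""
    by_source = {}
    for lead in leads:
        by_source.setdefault(lead.source, []).append(lead)
    report = {}
    for source, group in by_source.items():
        won = [lead.deal_value for lead in group if lead.converted]
        report[source] = {
            "leads": len(group),
            "conversion_rate": len(won) / len(group),
            "avg_deal_value": mean(won) if won else 0.0,
        }
    return report
```

With small AI-attributed samples, differences in conversion rate can be noise. Treat early comparisons as directional.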

Several tool categories support AEO measurement. Selecting the right platform often benefits from consulting an AEO agency selection guide to understand tool-agency integration.

Purpose-built platforms provide systematic AI visibility tracking.

Enterprise options:

Mid-market options:

Entry-level options:

For detailed pricing comparisons, review a comprehensive analysis of AI SEO tool pricing.

Major SEO platforms have added AI visibility capabilities.

Integrated options:

These provide unified traditional and AI visibility measurement, which helps resolve conflicts between platform tactics when you manage both channels.

Budget-constrained teams can implement manual monitoring, often complemented by tools from a free AI SEO tech stack.

Manual process:

Manual monitoring provides visibility insight without tool investment.
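Manual monitoring works best when every observation goes into the same spreadsheet layout, so later analysis is straightforward. This sketch appends observations to a CSV file with Python's `csv.DictWriter`. The column names are a hypothetical layout, not a required schema.

```python
import csv
import io

# Hypothetical column layout for a manual AEO monitoring log.
FIELDS = ["date", "platform", "query", "brand_cited", "sentiment", "cited_url", "notes"]


def append_observations(fileobj, rows, write_header=False):
    """Write observation dicts to fileobj; missing columns are left blank.

    Unknown keys raise ValueError (DictWriter's default), which catches typos.
    """
    writer = csv.DictWriter(fileobj, fieldnames=FIELDS)
    if write_header:
        writer.writeheader()
    for row in rows:
        writer.writerow({**{field: "" for field in FIELDS}, **row})


buffer = io.StringIO()  # use open("aeo_log.csv", "a", newline="") for a real file
append_observations(buffer, [{
    "date": "2025-01-06", "platform": "ChatGPT", "query": "best crm for startups",
    "brand_cited": "yes", "sentiment": "positive",
}], write_header=True)
```

Recording "no" rows as well as "yes" rows matters. Citation frequency is only computable when you know how many checks found nothing.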

Effective AEO programs require clear KPIs aligned to business objectives. Understanding the advantage that clear metric definitions give AEO helps establish meaningful targets.

Citation frequency targets:

Share of voice targets:

Sentiment targets:

Citation quality targets:

Traffic KPIs:

Brand KPIs:

AEO KPIs need an appropriate review cadence, particularly when you are following a comprehensive LLM optimization guide.

Recommended cadence:

Learning from measurement failures improves tracking effectiveness.

Small query sets produce unreliable data. AI responses vary significantly from run to run, so tracking only 10-20 queries creates noise that obscures real trends.

Fix: Track 50-100 queries per major topic area for statistically meaningful patterns.
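The effect of query-set size can be estimated with the normal-approximation margin of error for a proportion. For an observed 30% citation rate, the 95% margin is roughly ±23 percentage points at 15 queries, about ±13 at 50, and about ±9 at 100. This sketch assumes each query is an independent observation, which is a simplification since related queries tend to be cited together. The Wald approximation is also rough at very small samples.

```python
from math import sqrt


def margin_of_error(p, n, z=1.96):
    """Approximate 95% half-width for an observed citation rate p over n queries.

    Uses the normal (Wald) approximation; treat it as a rough guide only.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= p <= 1:
        raise ValueError("p must be in [0, 1]")
    return z * sqrt(p * (1 - p) / n)


for n in (15, 50, 100):
    print(n, round(margin_of_error(0.3, n), 3))
```

A month-over-month change smaller than the margin is hard to distinguish from normal response variation.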

Citation counts without sentiment context miss crucial insight. A brand mentioned frequently but negatively faces different challenges than one mentioned rarely but positively.

Fix: Include sentiment analysis in all citation tracking.

Focusing on a single platform misses the full picture. ChatGPT visibility doesn't guarantee Perplexity visibility. A quick comparison of AEO, GEO, and SEO illustrates why multi-platform measurement matters.

Fix: Track across multiple platforms to identify coverage gaps.

Visibility metrics without business connection produce activity metrics rather than results metrics, which becomes particularly problematic when evaluating AEO marketing examples for ROI justification.

Fix: Invest in attribution approaches—even imperfect attribution beats none.

Assuming SEO success translates to AEO success leads to misallocation. High-ranking content may not earn AI citations, a gap that becomes clear when you compare GEO (generative engine optimization) and AEO approaches.

Fix: Measure AEO independently rather than inferring from SEO metrics.

Implement AEO measurement progressively. For a complementary measurement framework, also review how to approach GEO (generative engine optimization).

Phase 1: Foundation (Week 1-2)

Phase 2: Systematic Tracking (Month 1-2)

Phase 3: Attribution Development (Month 2-4)

Phase 4: Optimization Loop (Ongoing)

Weekly quick checks for major queries catch significant changes. Monthly comprehensive reviews provide trending data. Quarterly evaluations assess strategy effectiveness and adjust KPIs.

Benchmarks vary significantly by industry and competition. Establish your current baseline, then target 50-100% improvement over 6 months for meaningful progress. Compare against competitors rather than absolute standards.
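Turning a baseline into a 6-month target is simple arithmetic, and it helps to know the monthly pace each target implies. From a 12% baseline citation rate, a 50% improvement means reaching 18%, or about 7% compound growth per month. Doubling means reaching 24%, or about 12% per month. The sketch caps targets at 100% by default, because a citation rate cannot exceed it. The function name and defaults are illustrative.

```python
def improvement_targets(baseline, months=6, improvements=(0.5, 1.0), cap=1.0):
    """For each relative improvement, return (target, required monthly compound growth).

    cap: upper bound for the target (1.0 for rates); pass None for unbounded metrics.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    targets = {}
    for improvement in improvements:
        target = baseline * (1 + improvement)
        if cap is not None:
            target = min(target, cap)  # a rate cannot exceed 100%
        monthly_growth = (target / baseline) ** (1 / months) - 1
        targets[improvement] = (target, monthly_growth)
    return targets


targets = improvement_targets(0.12)
```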

Yes. Manual monitoring through systematic queries and spreadsheet tracking provides valuable insight without tool investment. Paid tools offer automation and efficiency but aren't required for basic measurement.

Combine multiple attribution approaches: segment AI referral traffic in analytics, include AI discovery options in conversion surveys, correlate branded search growth with visibility changes, and track lead quality differences for AI-attributed sources.
